Zero-code and Serverless Llama3 Fine-tuning Powered by FEDML Nexus AI

The open-source Llama3 70B is wildly good: it performs on par with closed-source GPT-4 on the Chatbot Arena Leaderboard (as of April 20th, 2024). This gives enterprises and developers a good opportunity to own a high-performance, self-hosted LLM customized on their private data. At FEDML, we are very excited to share our zero-code, serverless platform for fine-tuning Llama3-8B/70B, which requires no deep expertise in AI or ML infrastructure.

Our Unique Solution

The serverless, zero-code fine-tuning capability in the FEDML Nexus AI platform provides several unique benefits compared to existing solutions on the market:

- Zero-code solution lets you focus on private data, not on training infrastructure: FEDML Nexus AI handles server management and maintenance and applies best practices for training Llama3. Developers only need to focus on fine-tuning the model on their private data.

- Pay only for the usage (i.e., how long your fine-tuning takes): With serverless, you pay only for the resources you use. This can be more cost-effective than maintaining dedicated hardware or renting GPUs from major clouds, especially for tasks like model fine-tuning that don't run continuously.

- Scalability accelerates your exploration: Serverless computing can automatically adjust computing resources based on the application's needs. This is particularly beneficial for fine-tuning large language models like Llama3-8B/70B, which may require multiple GPUs. In addition, it allows developers to launch multiple runs with different hyperparameters at the same time.

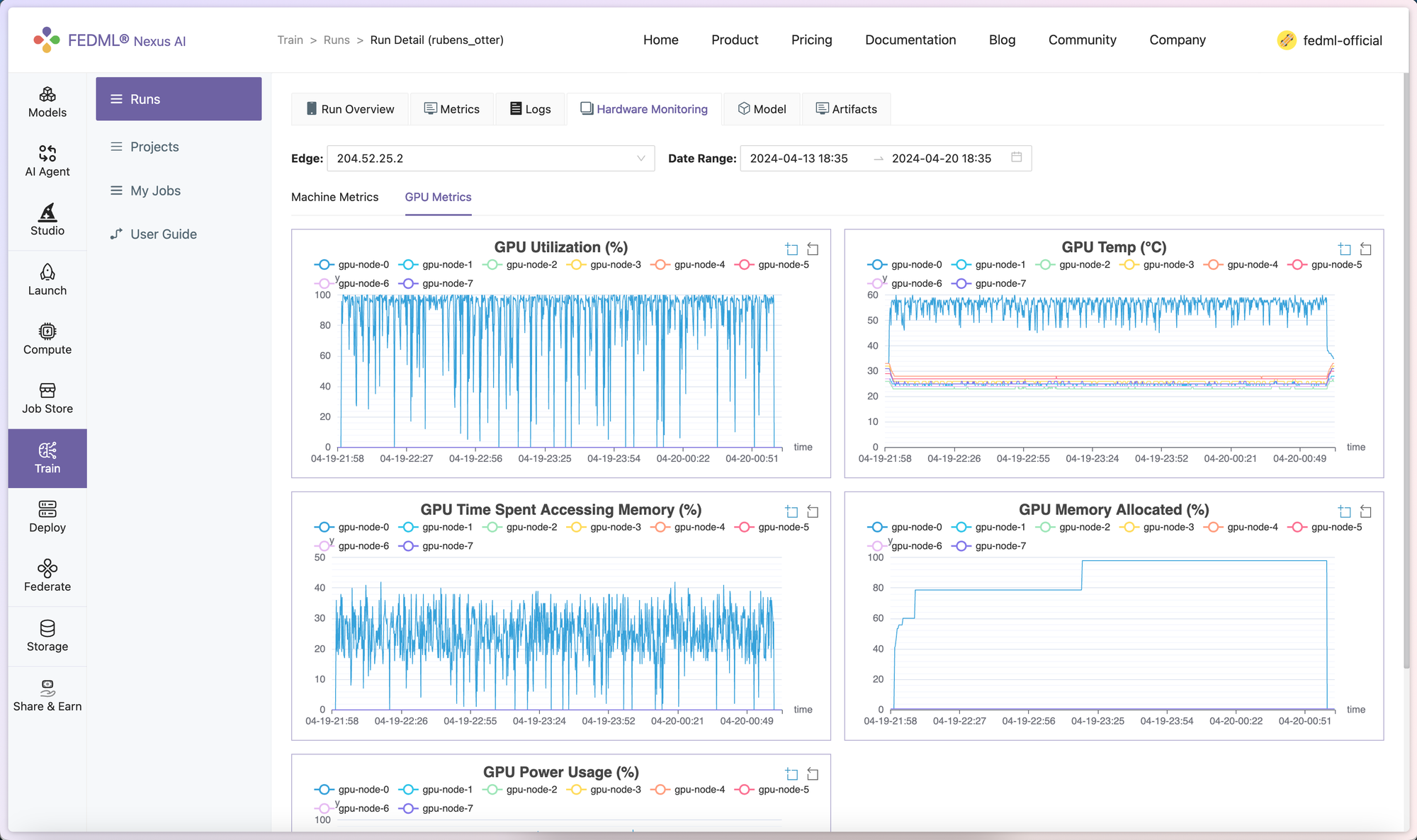

- Detailed experimental tracking: You can follow metrics, logs, and training costs while your training runs. Everything is available in one place, so you don't need to switch back and forth between other AI platforms.

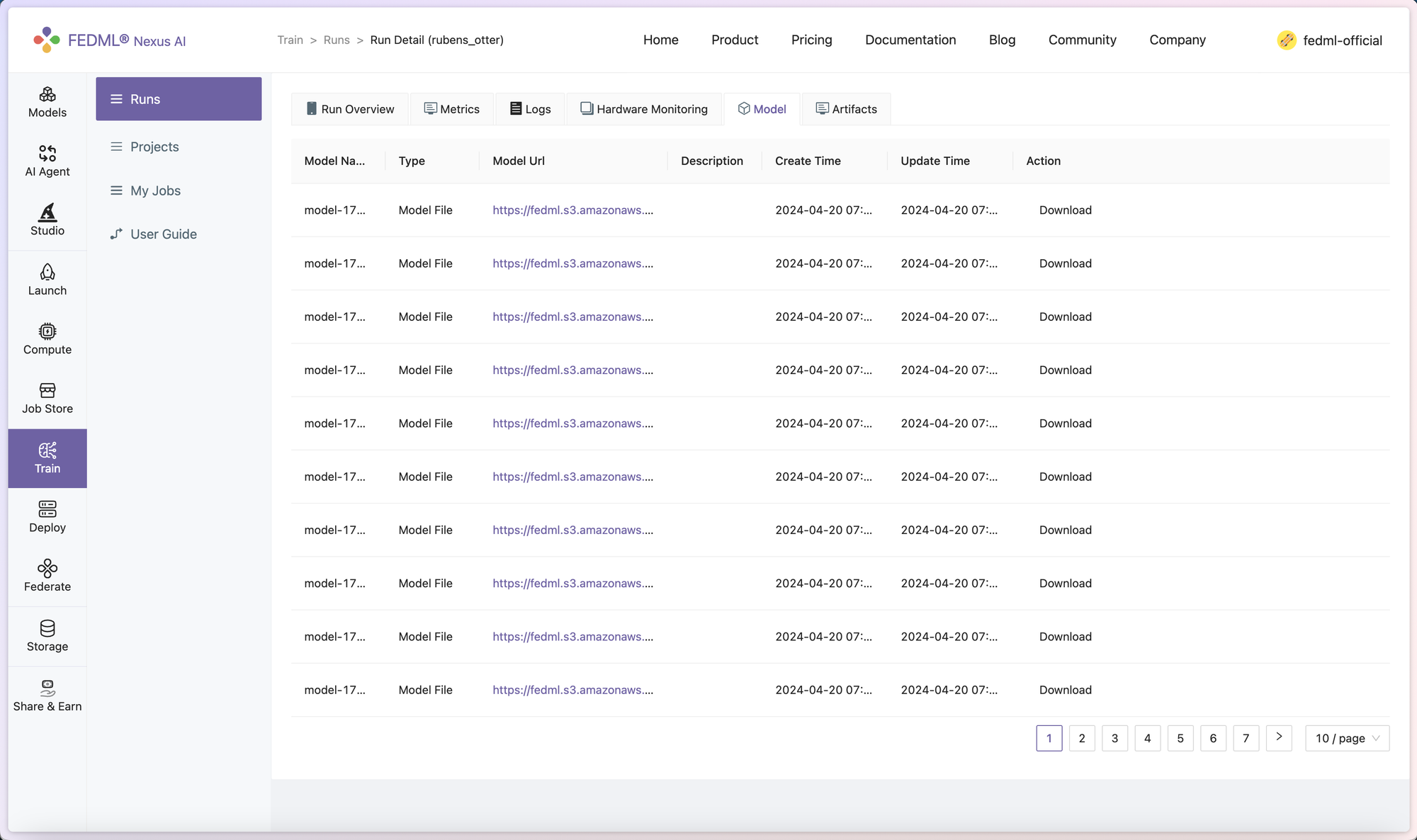

- Fast model deployment: Once fine-tuning is finished, a few clicks on FEDML Nexus AI are enough to deploy your fine-tuned model and expose it as a playground, an API, or even an AI Agent.

Now, let's see how easy it is to fine-tune Llama3 on the FEDML Nexus AI Platform.

Start Fine-tuning with Only One Click

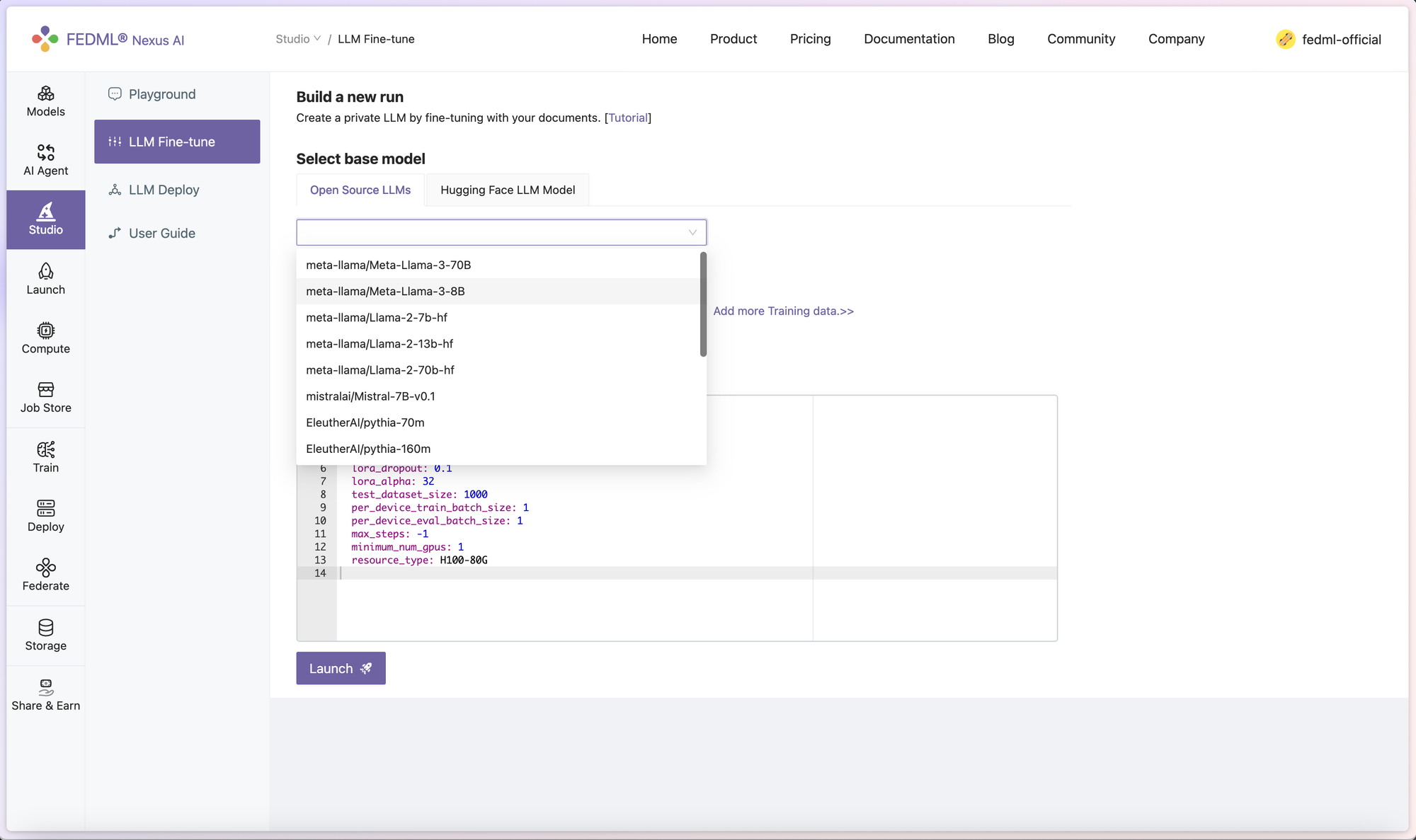

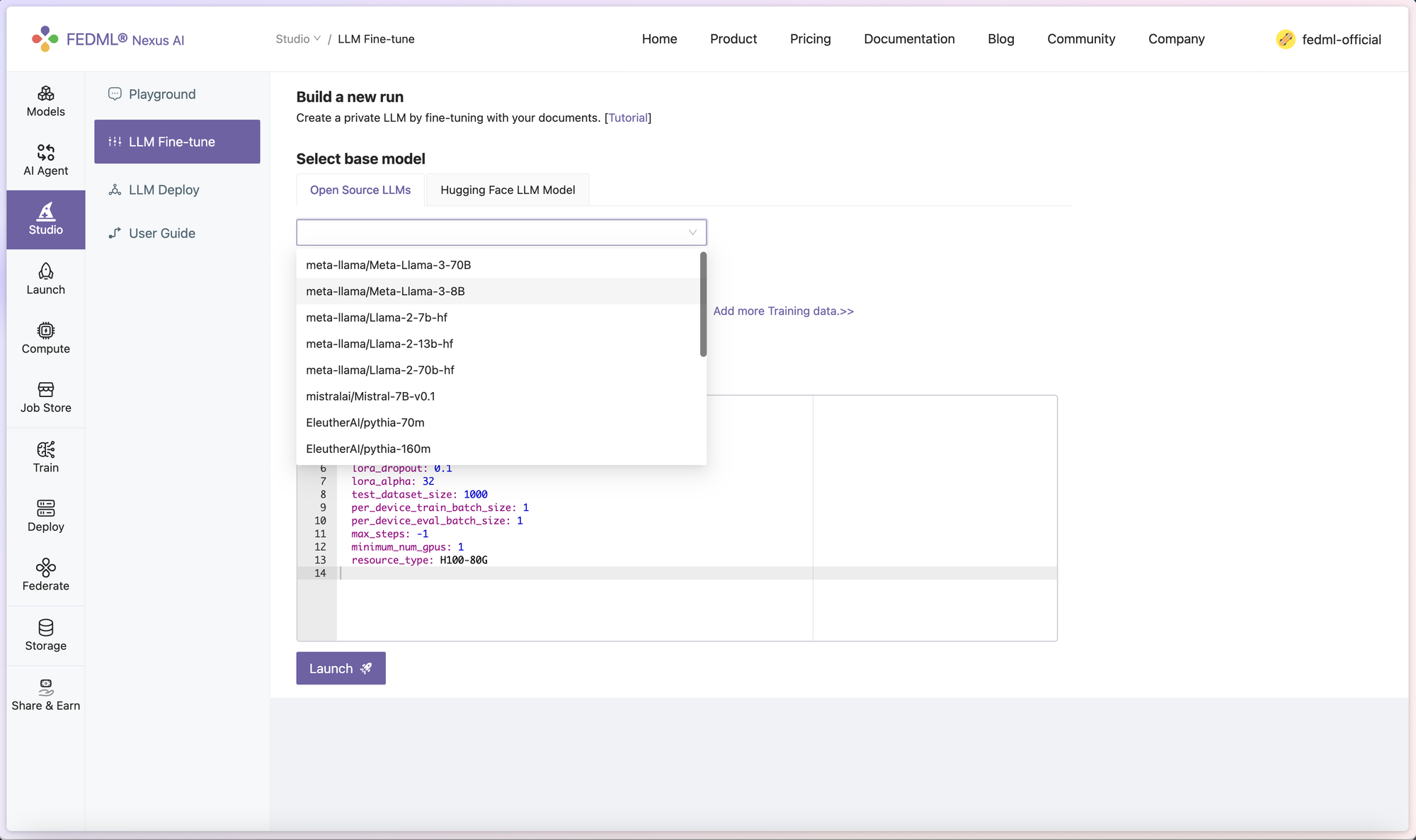

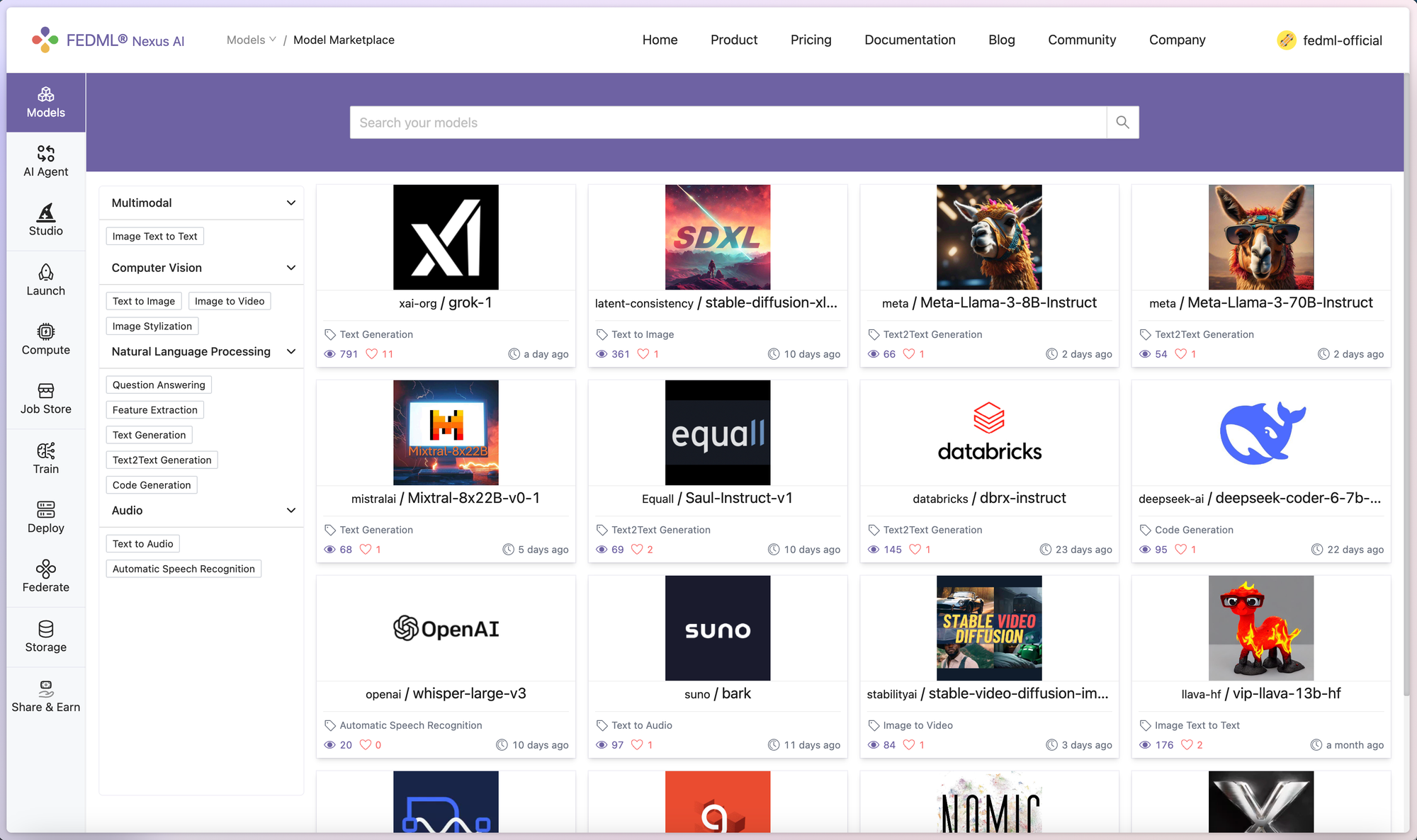

You just need to log in to https://fedml.ai, switch to Studio - LLM Fine-tune, select the base model as Llama3-8B or Llama3-70B, select the prebuilt dataset, and then click "Launch".

Prepare Your Own Training Data

There are two ways to prepare the training data.

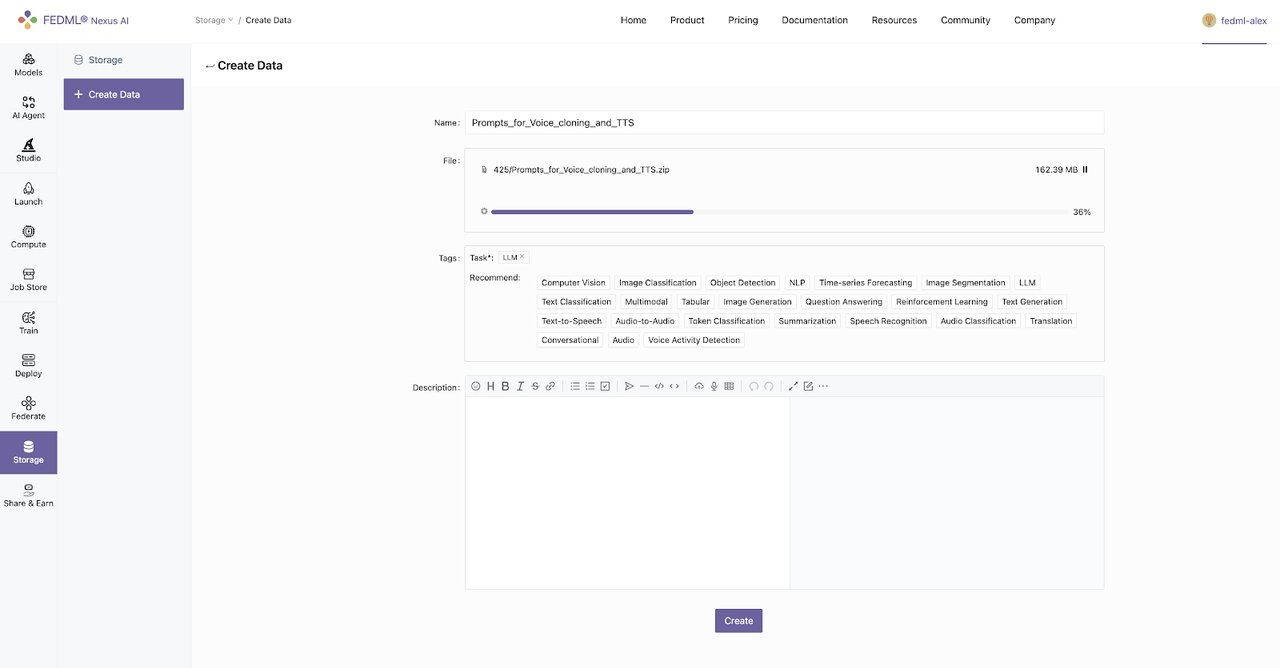

- Customized training data can be uploaded through the storage dashboard

- You can also use the Data Upload CLI:

fedml.api.storage

    fedml storage upload '/path/Prompts_for_Voice_cloning_and_TTS'
    Uploading Package to Remote Storage: 100%|████████████████████| 42.0M/42.0M [00:36<00:00, 1.15MB/s]
    Data uploaded successfully. | url: (https://03aa47c68e20656e11ca9e0765c6bc1f.r2.cloudflarestorage.com/fedml/3631/Prompts_for_Voice_cloning_and_TTS.zip?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=52d6cf37c034a6f4ae68d577a6c0cd61%2F20240307%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20240307T202738Z&X-Amz-Expires=604800&X-Amz-SignedHeaders=host&X-Amz-Signature=bccabd11df98004490672222390b2793327f733813ac2d4fac4d263d50516947)

Format for Custom Dataset

FEDML currently supports files in JSON Lines format. In a JSON Lines file (the name usually ends with .jsonl), each line contains one JSON object.
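As a rough sketch of what a well-formed file looks like in practice, the Python snippet below writes a couple of samples to a `.jsonl` file and then checks that every line parses as a JSON object matching one of the two sample layouts described in this section (Dolly-style or text only). The helper names (`classify_sample`, `write_jsonl`, `validate_jsonl`) are hypothetical and not part of FEDML, and the strict key check is an assumption: the platform may well accept extra fields.

```python
import json
import tempfile
from pathlib import Path

# Field names taken from the two layouts described in this section.
DOLLY_KEYS = {"instruction", "context", "response"}
TEXT_KEYS = {"text"}


def classify_sample(sample):
    """Return "dolly" or "text" for a valid sample, else raise ValueError."""
    if not isinstance(sample, dict):
        raise ValueError("each line must hold one JSON object")
    keys = set(sample)
    # Assumption: keys must match exactly; relax this if extra fields are fine.
    if keys == DOLLY_KEYS:
        kind = "dolly"
    elif keys == TEXT_KEYS:
        kind = "text"
    else:
        raise ValueError(f"unexpected keys: {sorted(keys)}")
    if not all(isinstance(value, str) for value in sample.values()):
        raise ValueError("every field value must be a string")
    return kind


def write_jsonl(path, samples):
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for sample in samples:
            f.write(json.dumps(sample, ensure_ascii=False) + "\n")


def validate_jsonl(path):
    """Validate every non-blank line and count samples per layout."""
    counts = {"dolly": 0, "text": 0}
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue
            try:
                counts[classify_sample(json.loads(line))] += 1
            except ValueError as err:  # also covers json.JSONDecodeError
                raise ValueError(f"line {line_no}: {err}") from err
    return counts


if __name__ == "__main__":
    samples = [
        {"instruction": "Which is a species of fish? Tope or Rope",
         "context": "", "response": "Tope"},
        {"text": "Which is a species of fish? Tope or Rope\nTope"},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "train.jsonl"
        write_jsonl(path, samples)
        print(validate_jsonl(path))
```

The demo mixes both layouts in one file only to exercise both branches of the check; whether a single dataset may mix them is not stated here, so it is probably safest to keep each file to one layout.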

(1) Dolly-style: each sample is a dictionary with the following format

    instruction: str  # question/instruction
    context: str      # can be empty
    response: str     # expected output

Example:

    {"instruction": "When did Virgin Australia start operating?", "context": "Virgin Australia, the trading name of Virgin Australia Airlines Pty Ltd, is an Australian-based airline. It is the largest airline by fleet size to use the Virgin brand. It commenced services on 31 August 2000 as Virgin Blue, with two aircraft on a single route. It suddenly found itself as a major airline in Australia's domestic market after the collapse of Ansett Australia in September 2001. The airline has since grown to directly serve 32 cities in Australia, from hubs in Brisbane, Melbourne and Sydney.", "response": "Virgin Australia commenced services on 31 August 2000 as Virgin Blue, with two aircraft on a single route."}
    {"instruction": "Which is a species of fish? Tope or Rope", "context": "", "response": "Tope"}
    {"instruction": "Why can camels survive for long without water?", "context": "", "response": "Camels use the fat in their humps to keep them filled with energy and hydration for long periods of time."}
    {"instruction": "Alice's parents have three daughters: Amy, Jessy, and what's the name of the third daughter?", "context": "", "response": "The name of the third daughter is Alice"}
    ...

(2) Text only: each sample is a dictionary with the following format

    text: str  # contains the entire text sample

Example:

    {"text": "When did Virgin Australia start operating?\nVirgin Australia, the trading name of Virgin Australia Airlines Pty Ltd, is an Australian-based airline. It is the largest airline by fleet size to use the Virgin brand. It commenced services on 31 August 2000 as Virgin Blue, with two aircraft on a single route. It suddenly found itself as a major airline in Australia's domestic market after the collapse of Ansett Australia in September 2001. The airline has since grown to directly serve 32 cities in Australia, from hubs in Brisbane, Melbourne and Sydney.\nVirgin Australia commenced services on 31 August 2000 as Virgin Blue, with two aircraft on a single route."}
    {"text": "Which is a species of fish? Tope or Rope\nTope"}
    ...

Hyperparameter Setting (Optional)

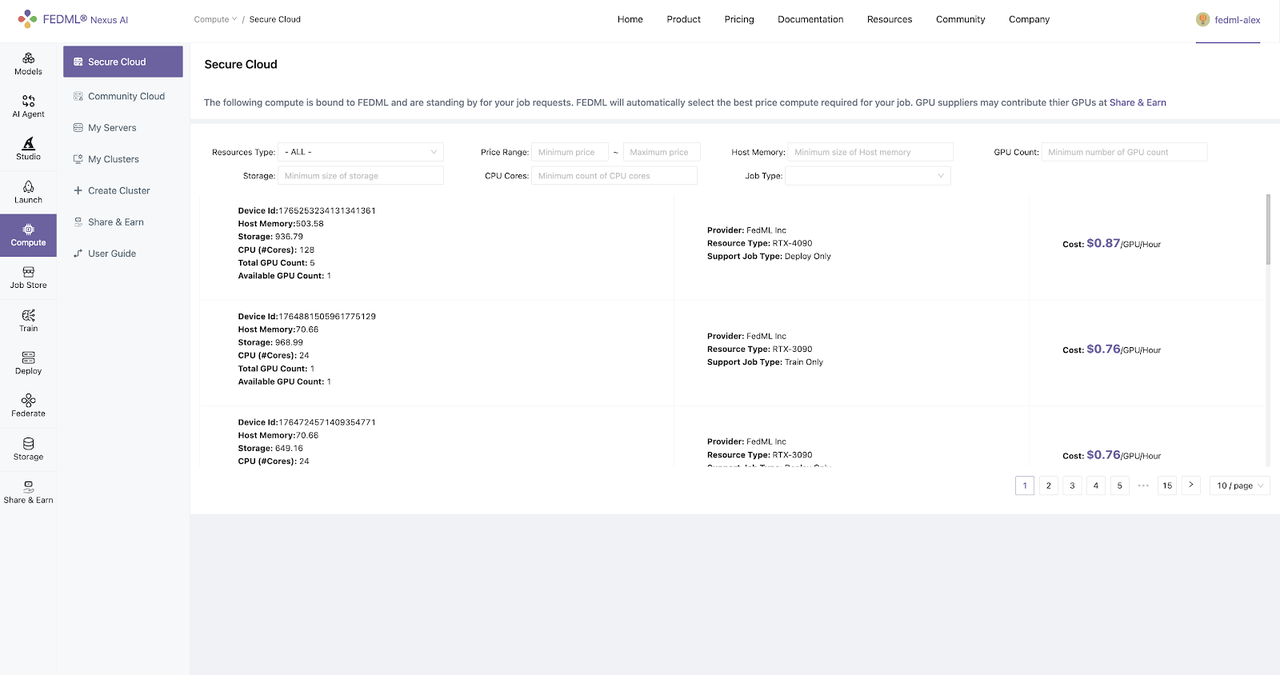

By default, fine-tuning runs on H100-80G GPUs. You can pick another resource type by setting the resource_type argument; the available values are listed at https://fedml.ai/compute/secure (Compute - Secure Cloud).

For the other hyperparameters related to the training optimizer, we don't suggest changing them unless you are an expert in Llama3 training :-)

Please share your feedback through our Community, and let us know if you need further help from our ML team.

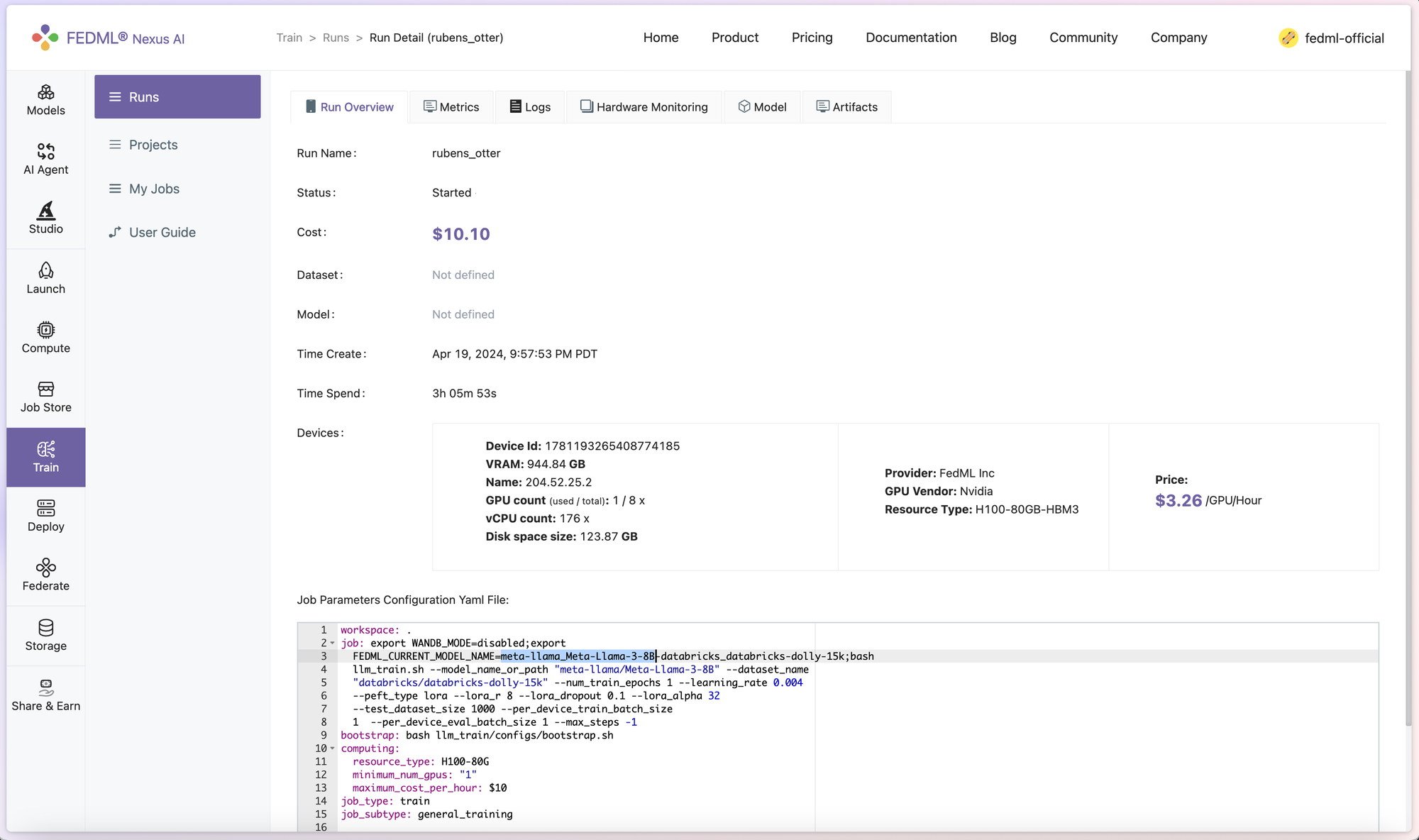

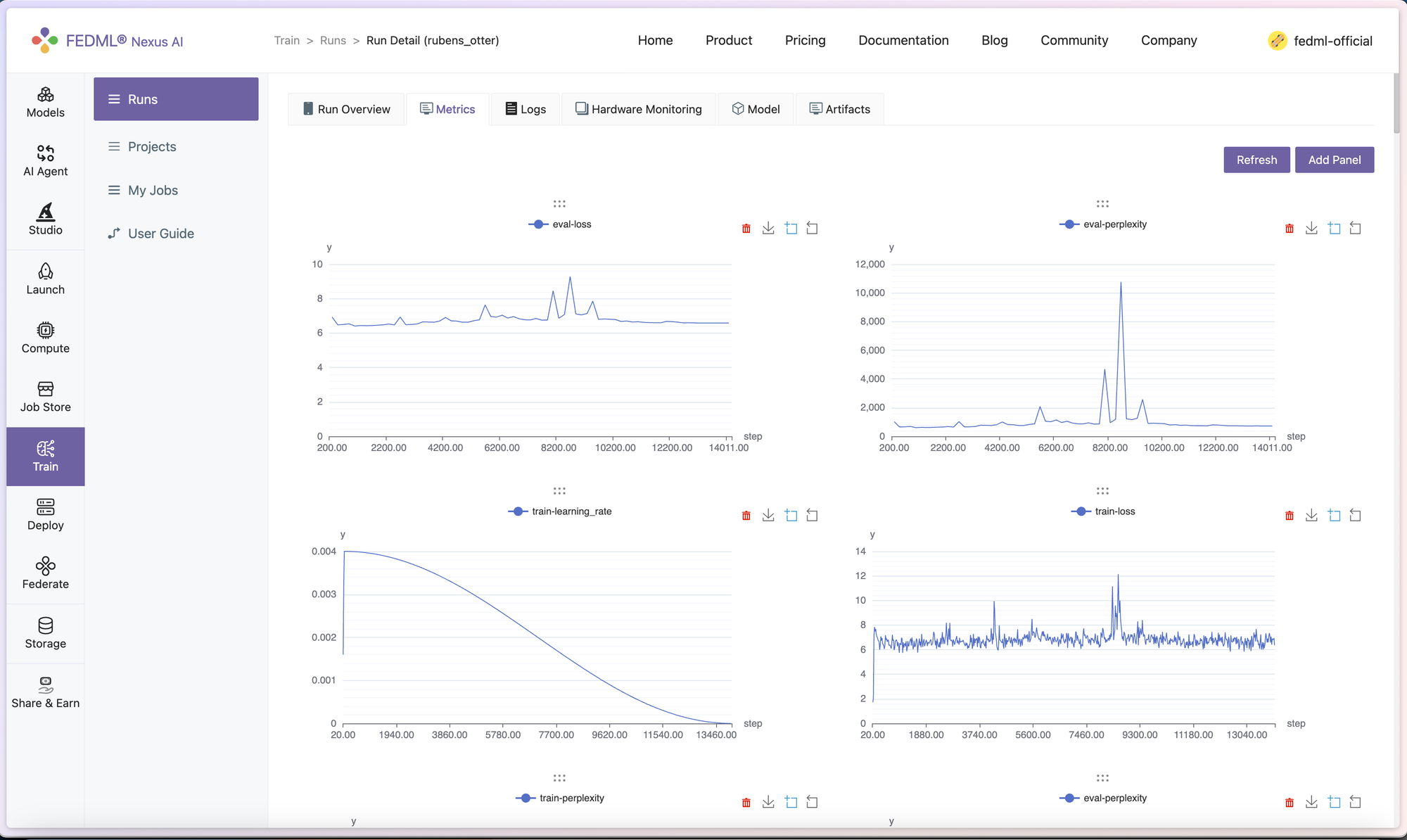

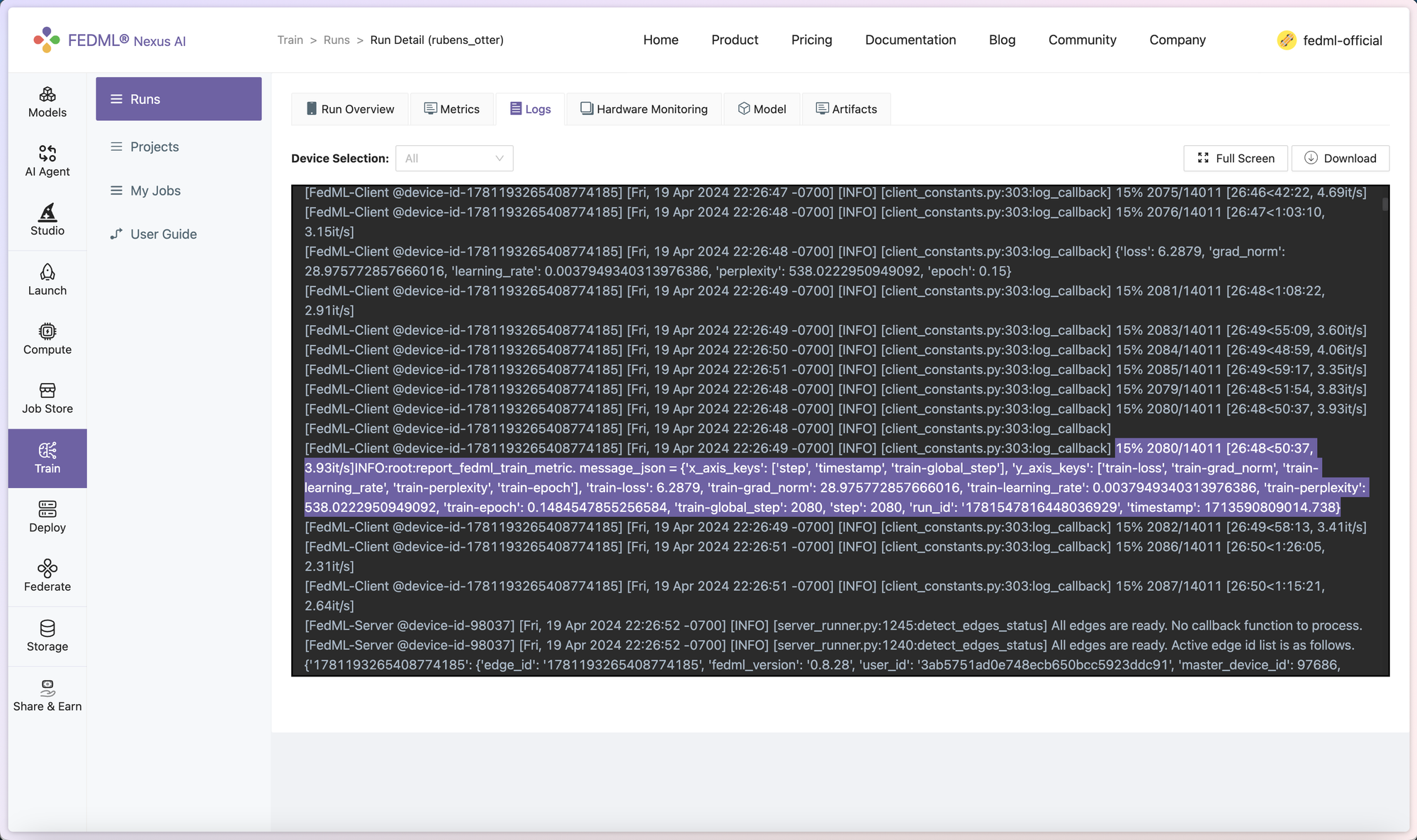

Experimental Tracking

We also provide a seamless experimental tracking experience: you don't need to switch to another AI platform to track the run overview, metrics, logs, hardware, and model artifacts. The prebuilt Llama3 training job uses our fedml.log() API to track experiments (https://doc.fedml.ai/open-source/api/api-experimental-tracking).
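To give a feel for how such tracking calls typically sit in a training loop, here is a minimal Python sketch. It assumes, based on the linked API documentation, that fedml.log() accepts a dictionary of metrics plus a step keyword. The loop, the metric name `train/loss`, and the helper names are made up for illustration and are not the prebuilt job's actual code. The logger is passed in as a parameter so the sketch runs without FEDML installed.

```python
def print_logger(metrics, step=None):
    """Stand-in logger used when FEDML is not available."""
    print(f"step={step} metrics={metrics}")


def log_training_metrics(losses, log_fn=print_logger, log_every=2):
    """Report every `log_every`-th loss value; return how many were logged."""
    logged = 0
    for step, loss in enumerate(losses, start=1):
        if step % log_every == 0:
            log_fn({"train/loss": loss}, step=step)
            logged += 1
    return logged


if __name__ == "__main__":
    # Inside a FEDML job you would pass fedml.log instead (assumed signature:
    # fedml.log(metrics_dict, step=...)); here we use the local stand-in.
    log_training_metrics([1.20, 0.95, 0.81, 0.74])
```

Keeping the logger injectable also makes it easy to unit-test the loop or to swap in a different tracking backend later.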

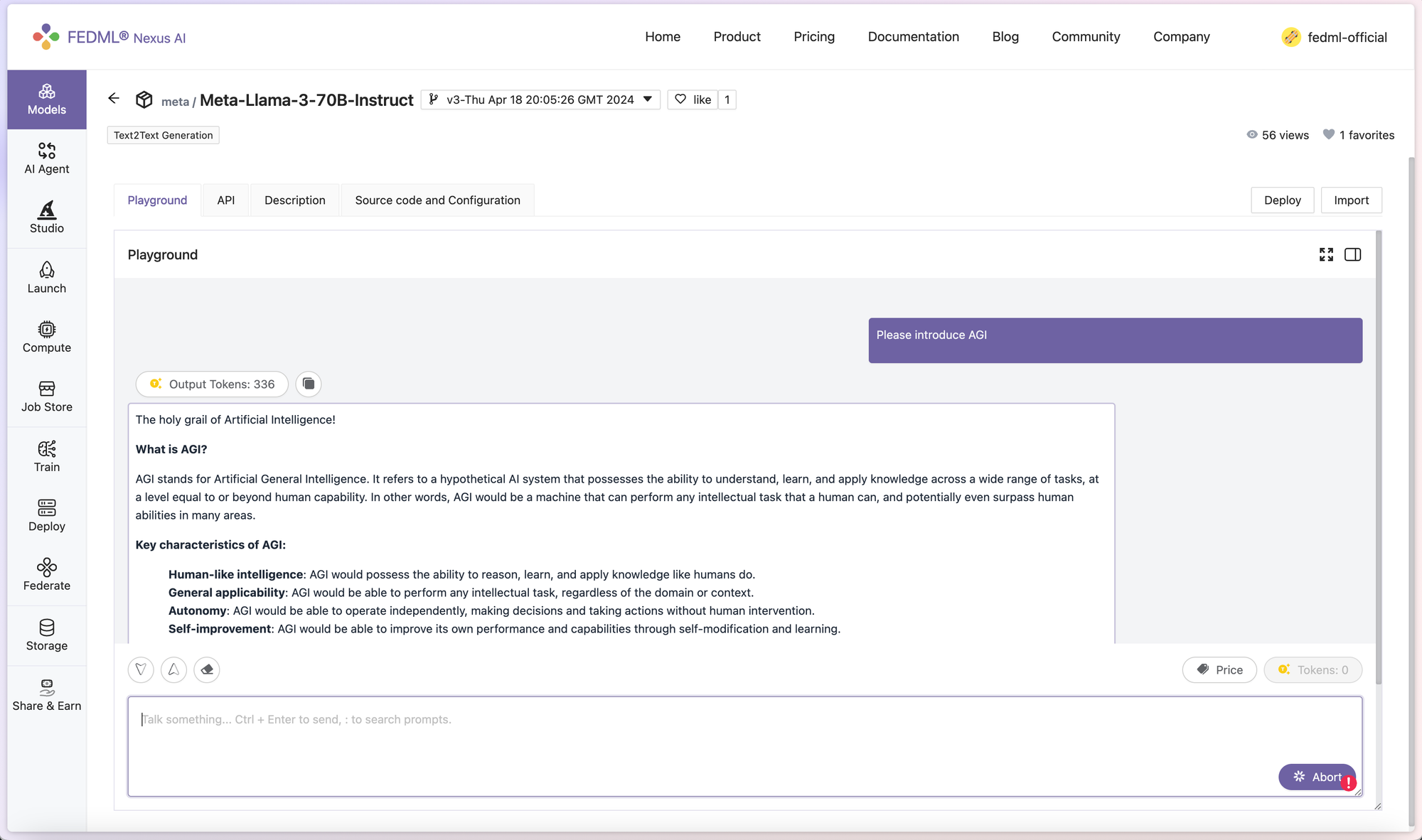

Model Deployment

Once training is finished, you can quickly create a model endpoint on FEDML Nexus AI and try the model in the Playground or through API calls. For more details, see: https://blog.fedml.ai/scalable-model-deployment-and-serving-platform-at-fedml-nexus-ai/

Here is the default Llama3-70B: https://fedml.ai/models/1047?owner=meta. Please give it a try.

About FEDML, Inc.

FEDML is your generative AI platform at scale, enabling developers and enterprises to build and commercialize their own generative AI applications easily, scalably, and economically. Its flagship product, FEDML Nexus AI, provides unique features in enterprise AI platforms, model deployment, model serving, AI agent APIs, launching training/inference jobs on serverless/decentralized GPU cloud, experimental tracking for distributed training, federated learning, security, and privacy.