Fast and Scalable AI Agents with Groq LPU and FEDML Nexus AI

FEDML, "Your Generative AI Platform at Scale", has announced a collaboration with Groq, the inventor of the LPU™ (Language Processing Unit) Inference Engine, which is tailored for fast, efficient inference of large ML models. The collaboration introduces a beta release of Groq LPU on the FEDML Nexus AI platform. It broadens the application of Groq LPU and paves the way for powerful AI Agents that demand real-time performance. Developers can plug the FEDML-Groq API into scalable AI Agents supported by FEDML's own distributed vector database framework and remote function calling. We explain how this integration makes AI agents fast and scalable. We also introduce advanced features of FEDML Nexus AI's cross-cloud/decentralized model service platform, including detailed endpoint observability tools that let developers track, assess, and improve the performance of their APIs. This collaboration bridges the gap between LPU hardware and AI application developers, giving app developers turn-key solutions for building on the world’s fastest inference service.

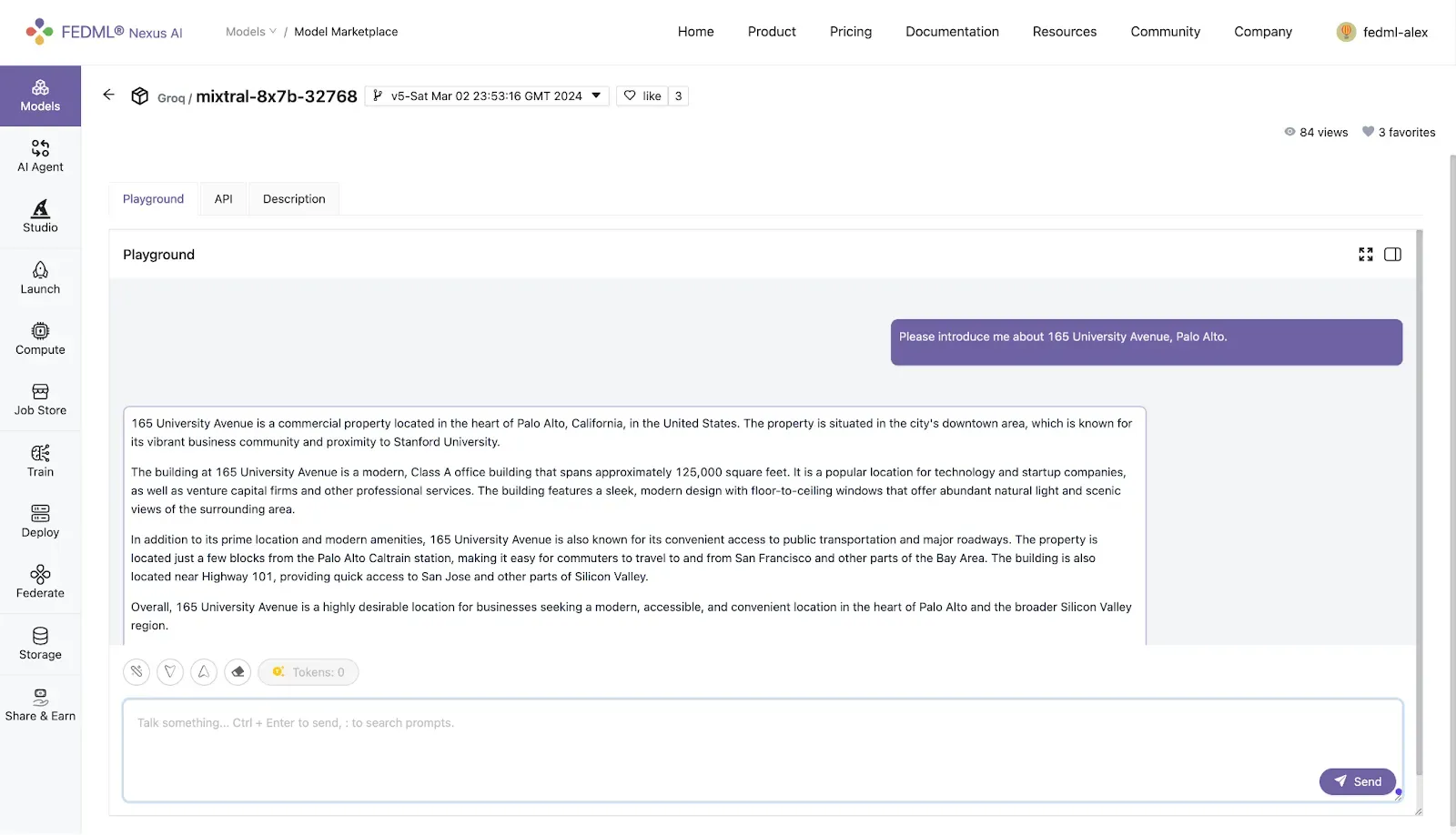

In this initial offering, FEDML Nexus AI releases the FEDML-Groq API for Mistral’s Mixtral 8x7B SMoE (32K context length), which achieves a whopping 480 tokens/s.

Please visit https://fedml.ai/models/708?owner=Groq to get access.

- Why Integrate Groq’s High-speed APIs?

- How to use FEDML-Groq API?

- How to build AI Agents with FEDML-Groq API?

- Groq’s API Performance Monitoring

- How Does the FEDML-Groq Integration Work?

- Further Advances: Customize, Deploy, and Scale

Why Integrate Groq's High-speed APIs?

Combining the Mixtral 8x7B model’s performance with Groq's rapid API access on the FEDML Nexus AI platform opens up an exciting realm of possibilities for AI Agent creation, promising transformative applications across various sectors:

- Healthcare: Utilize AI agents to sift through extensive medical records swiftly and accurately, enhancing diagnosis and patient care.

- Finance: Deploy AI Agents for instantaneous transaction analysis to detect and thwart fraud in real time or to power sophisticated, high-frequency trading algorithms.

- Smart City and Mobility: Implement AI Agents to dynamically manage traffic, improving urban mobility and alleviating congestion through real-time data processing.

- Advertising: Create AI Agents for streaming platforms that adapt ad content in response to viewer interactions, dramatically boosting engagement and ROI for advertisers.

- Supply Chain Management: Use AI Agents to proactively address supply chain challenges, analyzing diverse data sources like weather, global events, and logistics to predict disruptions and adapt operations seamlessly.

How to use FEDML-Groq API?

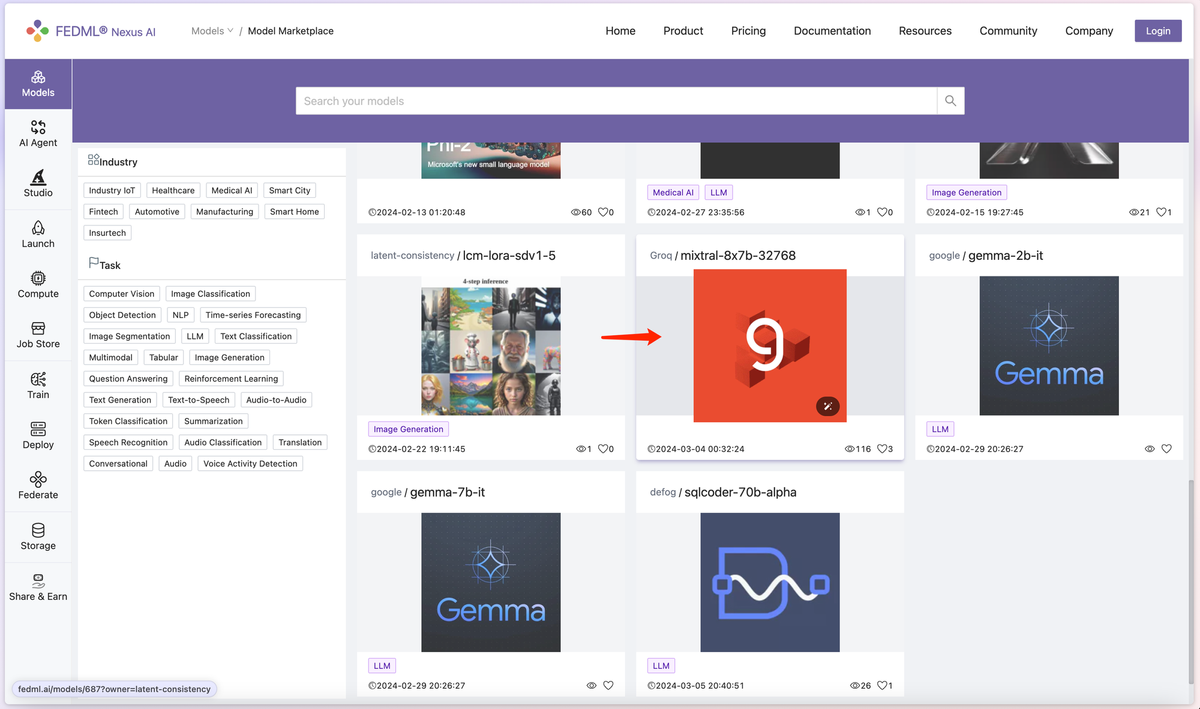

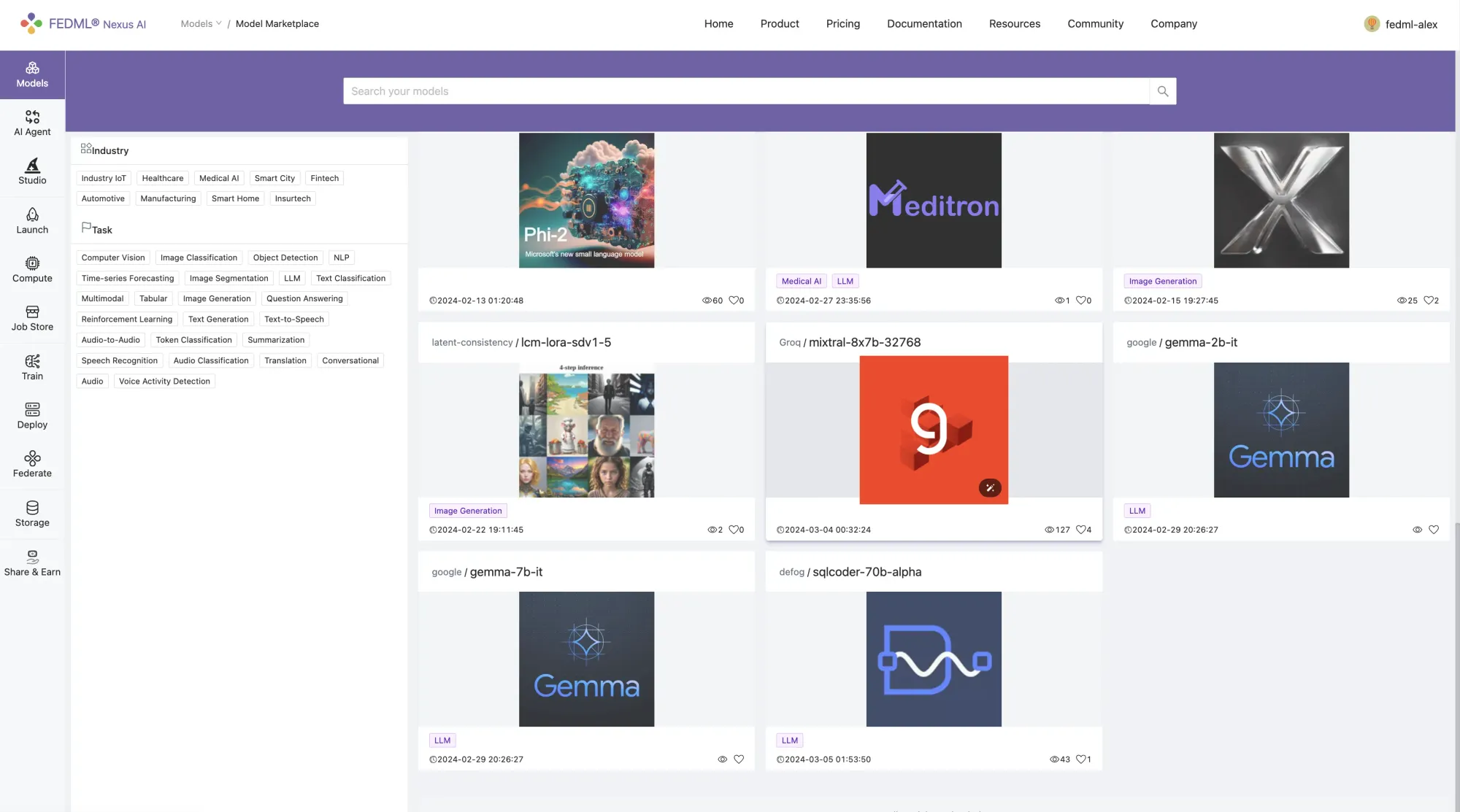

AI developers can start using FEDML-Groq API for Mixtral 8x7B in just three easy steps:

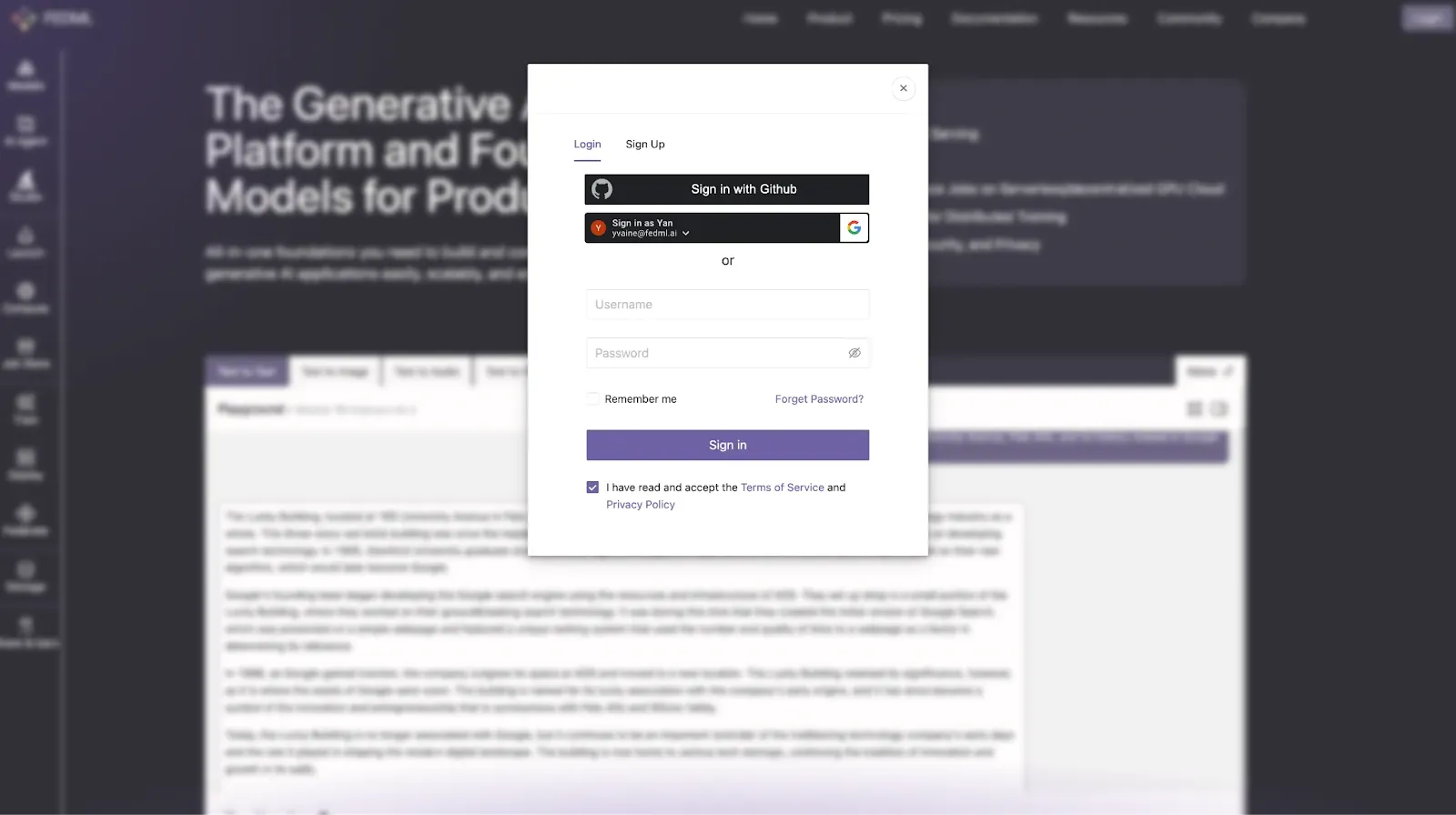

- Log in to FEDML Nexus AI platform.

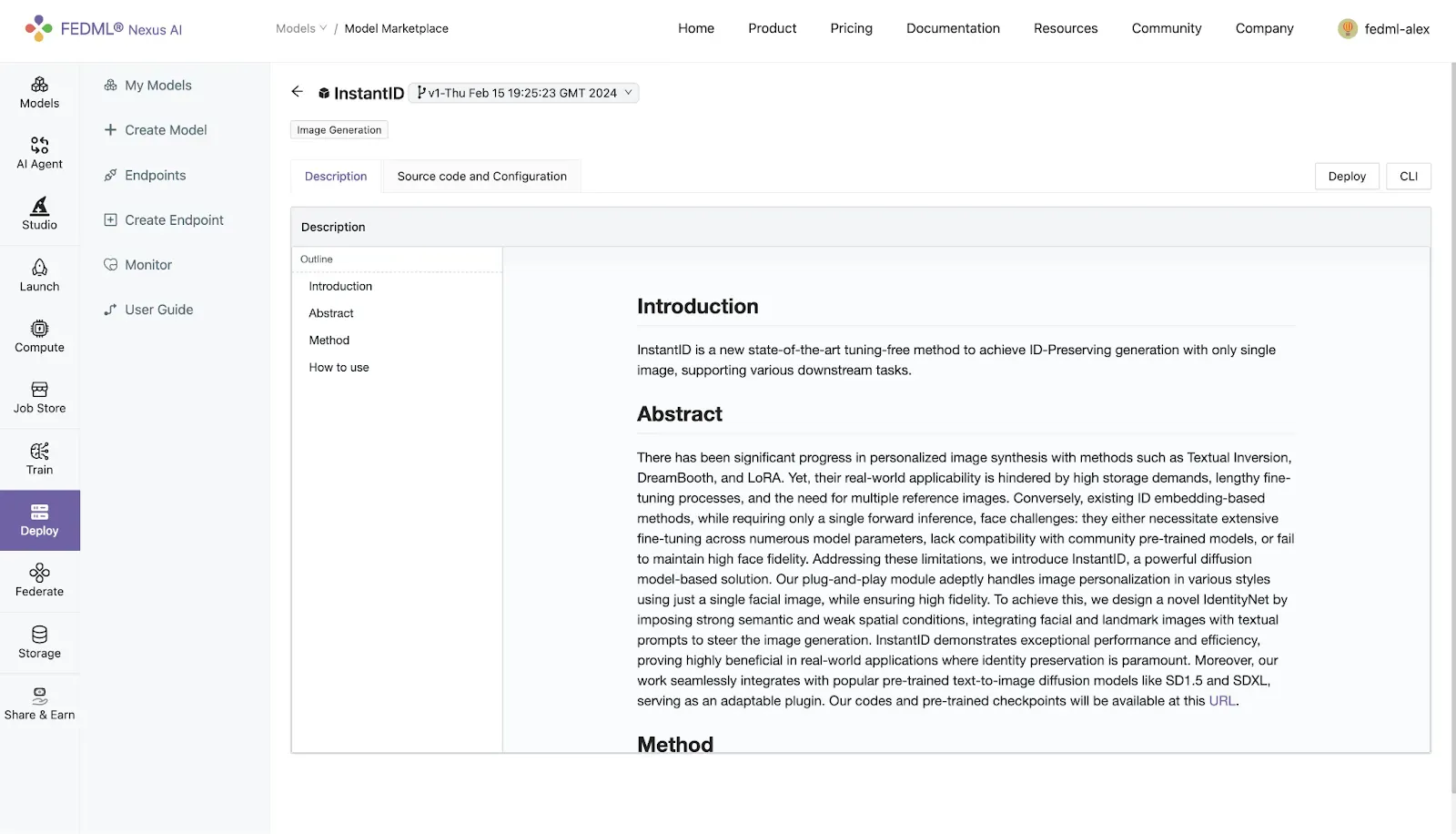

- Navigate to Models -> Model Marketplace and select Groq/mixtral-8x7b-32768.

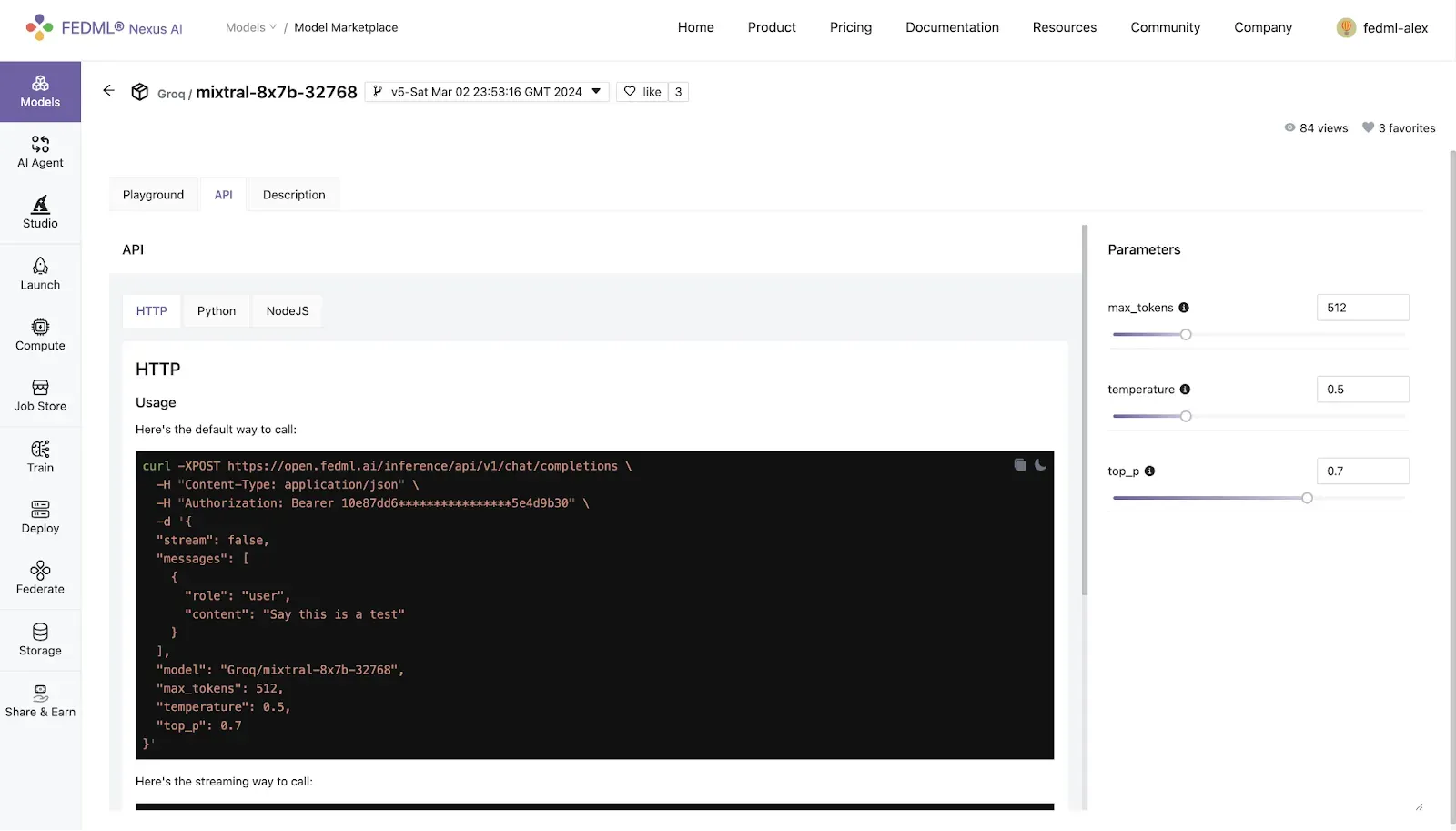

- Explore the model through the Model Playground and API.

Developers can use the provided API or the model playground to see how quickly it responds. This interactive environment lets developers explore its capabilities and potential applications.
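For developers who prefer code to the playground, here is a minimal sketch of calling the API from Python with only the standard library. It assumes the endpoint accepts an OpenAI-style chat-completions JSON body and a bearer-token header; ENDPOINT_URL is a hypothetical placeholder, and the real URL, authentication scheme, and request/response schema should be copied from the model's API page on FEDML Nexus AI.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the endpoint URL shown on the model's API page.
ENDPOINT_URL = "https://example.invalid/fedml-groq/chat/completions"
MODEL_ID = "Groq/mixtral-8x7b-32768"


def build_payload(prompt: str, max_tokens: int = 256) -> dict:
    """Build a chat-completions style request body (assumed schema)."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }


def build_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in an HTTP POST with a bearer token (assumed auth scheme)."""
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response (assumed schema)."""
    return response["choices"][0]["message"]["content"]


def ask(api_key: str, prompt: str, timeout: float = 30.0) -> str:
    """Send one prompt over the network and return the reply text."""
    req = build_request(api_key, build_payload(prompt))
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.loads(resp.read().decode("utf-8")))
```

Keeping payload construction and response parsing separate from the network call makes it easy to adapt the sketch once the actual schema is confirmed.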

How to build AI Agents with FEDML-Groq API?

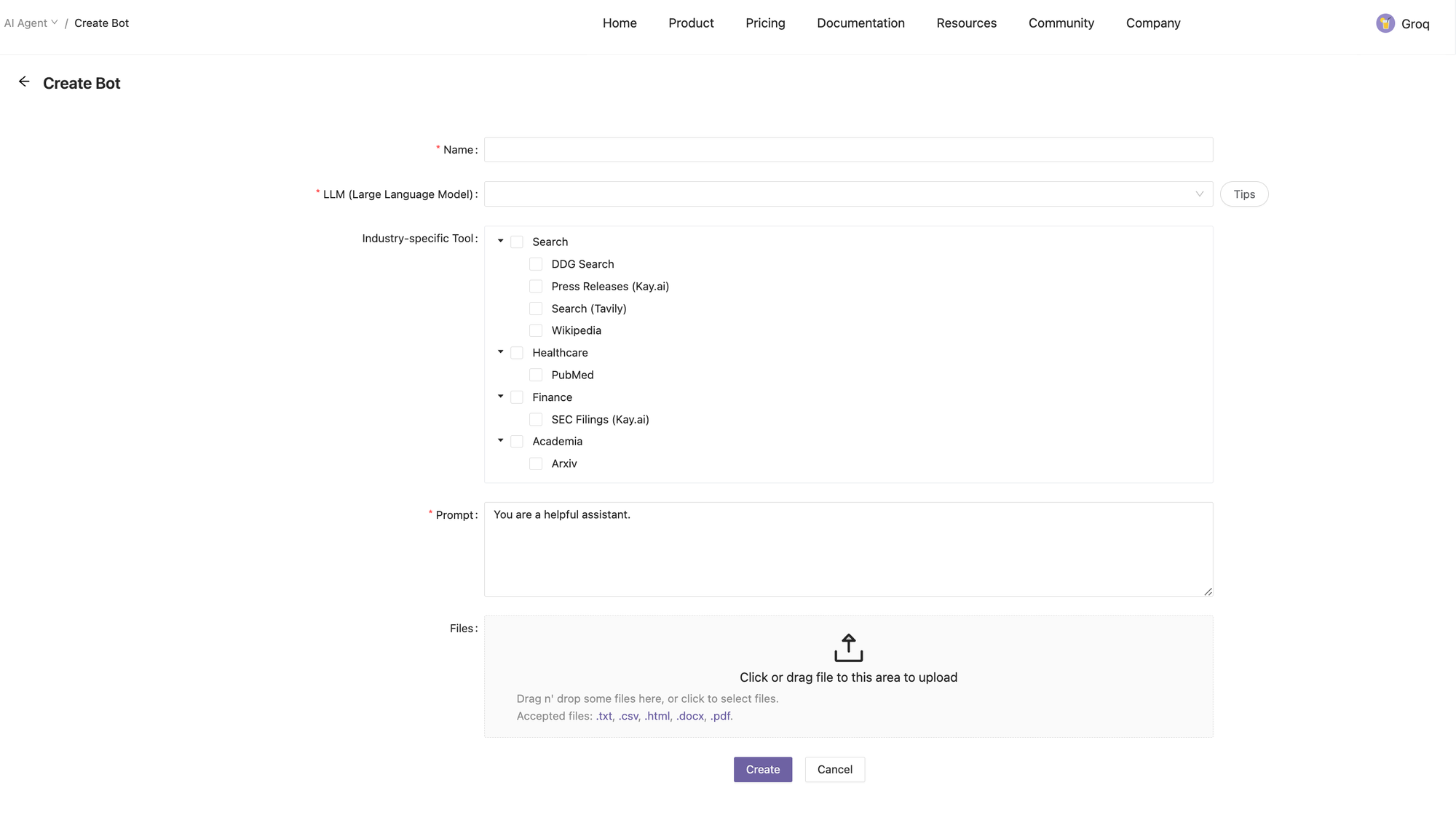

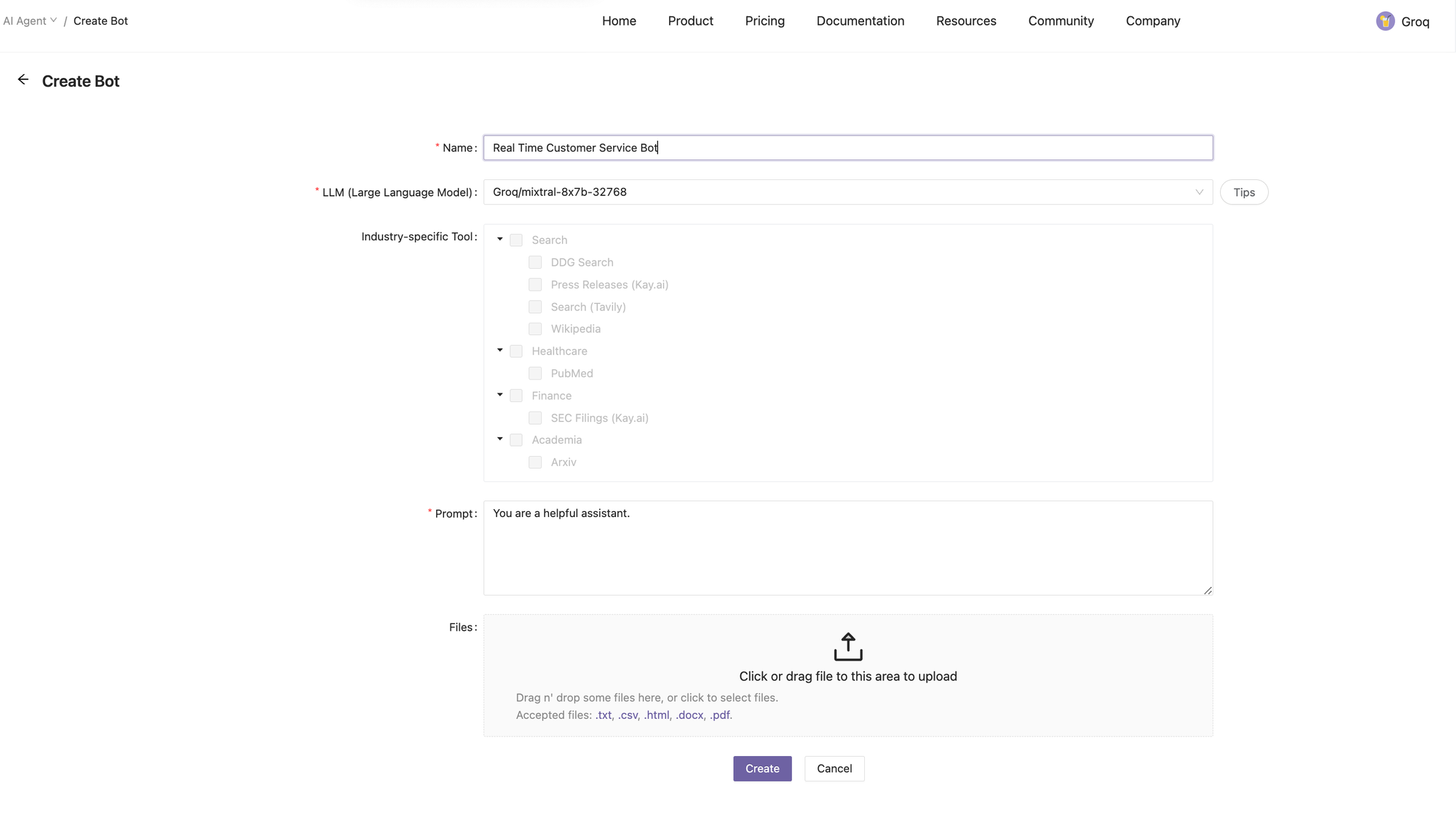

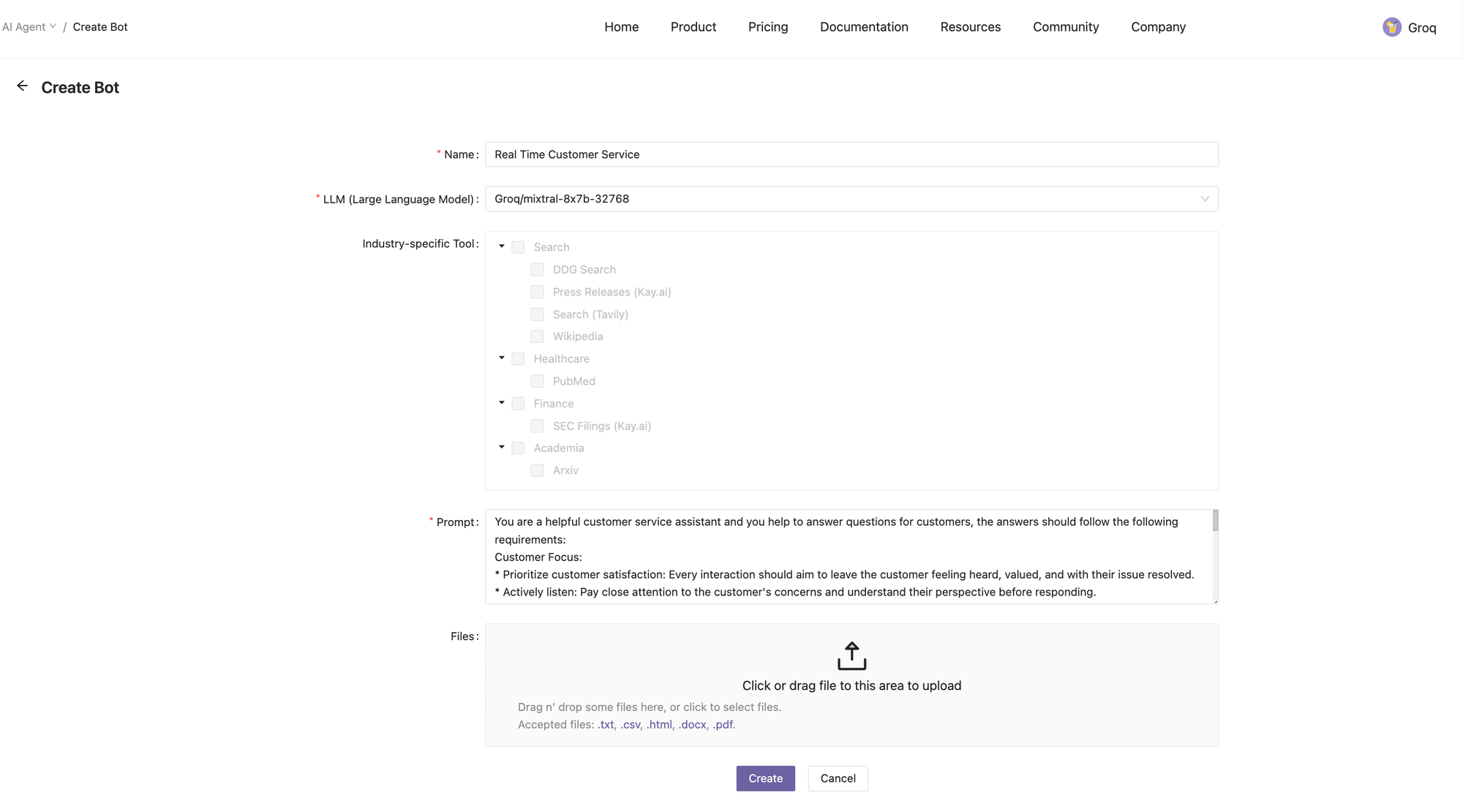

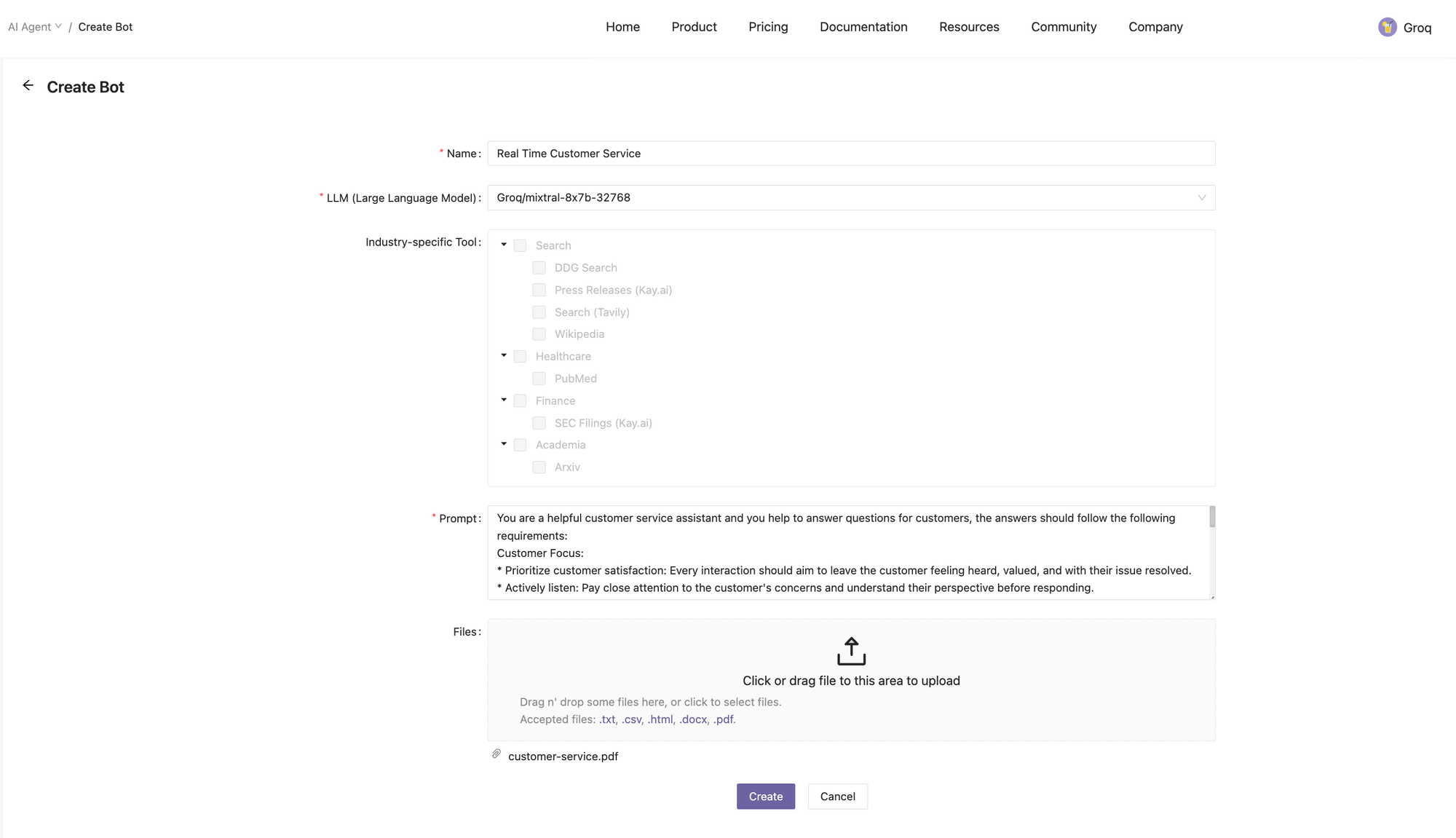

Besides the FEDML-Groq model serving API, FEDML Nexus AI also enables LLM and AI developers to build extremely fast AI agents with the FEDML-Groq API. The workflow for creating an AI agent is as follows:

- Navigate to AI Agents and click Create Bot.

- Name the agent and select the Groq/mixtral-8x7b model.

- (Optional) Select an industry-specific tool, such as PubMed, to power the agent's searches with PubMed's search engine.

- Provide a prompt for the agent (e.g., "real-time customer service requirement").

- Upload a file, such as a PDF document, and generate the agent in a single click.

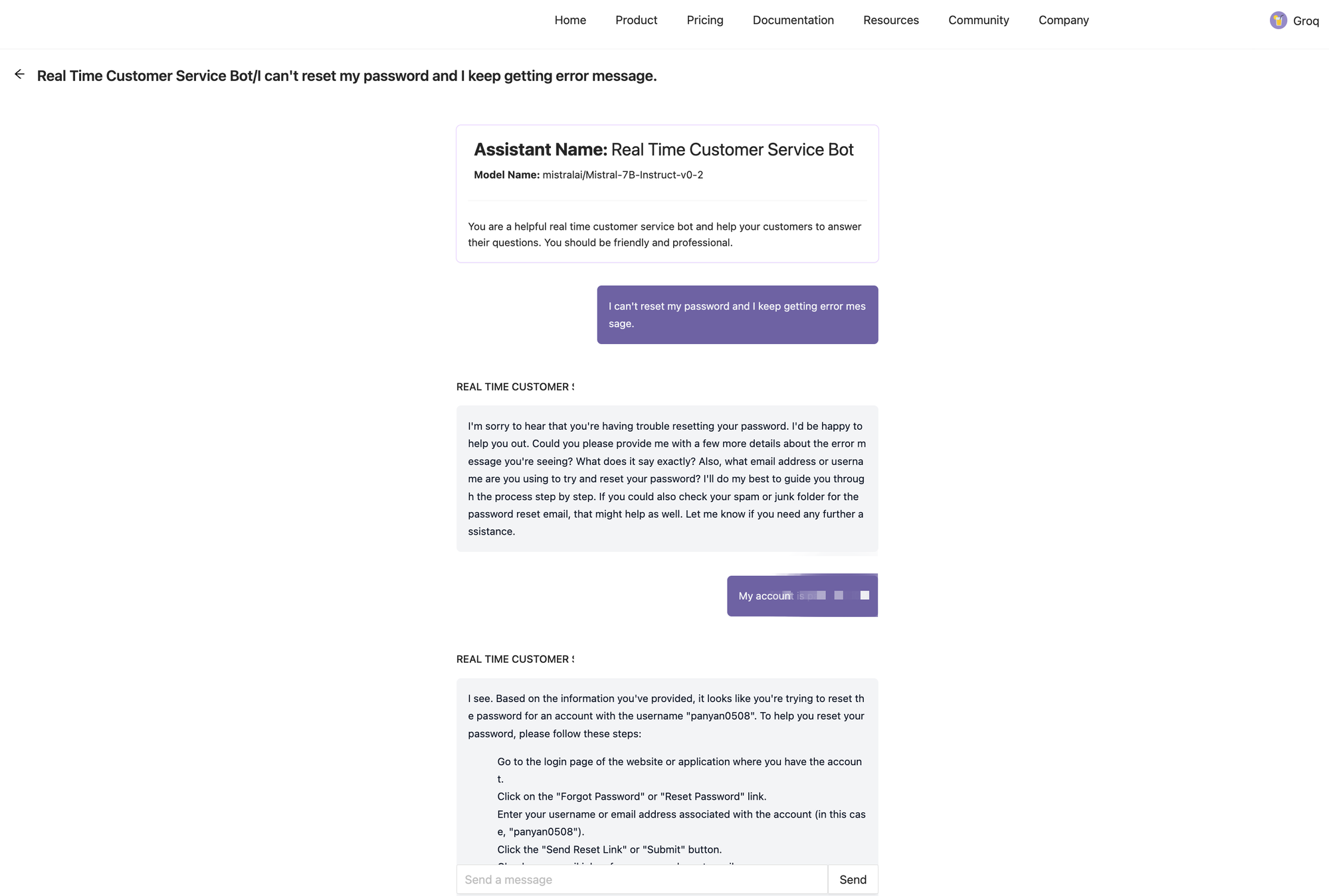

- See Groq's blazing-fast speed in action! Chat with the agent, ask questions, give it tasks, and get instant results.
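An agent that answers questions about an uploaded file has to find the relevant passages and place them in the model prompt. The sketch below illustrates that general retrieval-augmented pattern with a toy in-memory store split into shards and queried scatter-gather style, echoing the distributed vector DB idea. It is not FEDML's agent implementation: term overlap stands in for embedding similarity, and all names (Shard, build_store, retrieve, build_prompt) are hypothetical.

```python
import heapq
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, size: int = 40) -> list[str]:
    """Split an uploaded document into chunks of roughly `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def score(query: str, passage: str) -> int:
    """Toy relevance: number of shared terms (stand-in for vector similarity)."""
    q, p = Counter(tokenize(query)), Counter(tokenize(passage))
    return sum((q & p).values())


class Shard:
    """One partition of a toy distributed passage store."""

    def __init__(self) -> None:
        self.passages: list[str] = []

    def top_k(self, query: str, k: int) -> list[tuple[int, str]]:
        return heapq.nlargest(k, ((score(query, p), p) for p in self.passages))


def build_store(chunks: list[str], n_shards: int) -> list[Shard]:
    shards = [Shard() for _ in range(n_shards)]
    for i, c in enumerate(chunks):
        shards[i % n_shards].passages.append(c)  # round-robin placement
    return shards


def retrieve(shards: list[Shard], query: str, k: int = 2) -> list[str]:
    """Scatter the query to every shard, then merge the local top-k lists."""
    candidates = [hit for s in shards for hit in s.top_k(query, k)]
    return [p for _, p in heapq.nlargest(k, candidates)]


def build_prompt(system_prompt: str, context: list[str], question: str) -> str:
    ctx = "\n".join(f"- {c}" for c in context)
    return f"{system_prompt}\n\nContext:\n{ctx}\n\nQuestion: {question}"
```

The assembled prompt string is what would then be sent to the model through the API; a production system would replace the term-overlap score with real embeddings.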


Groq’s API Performance Monitoring

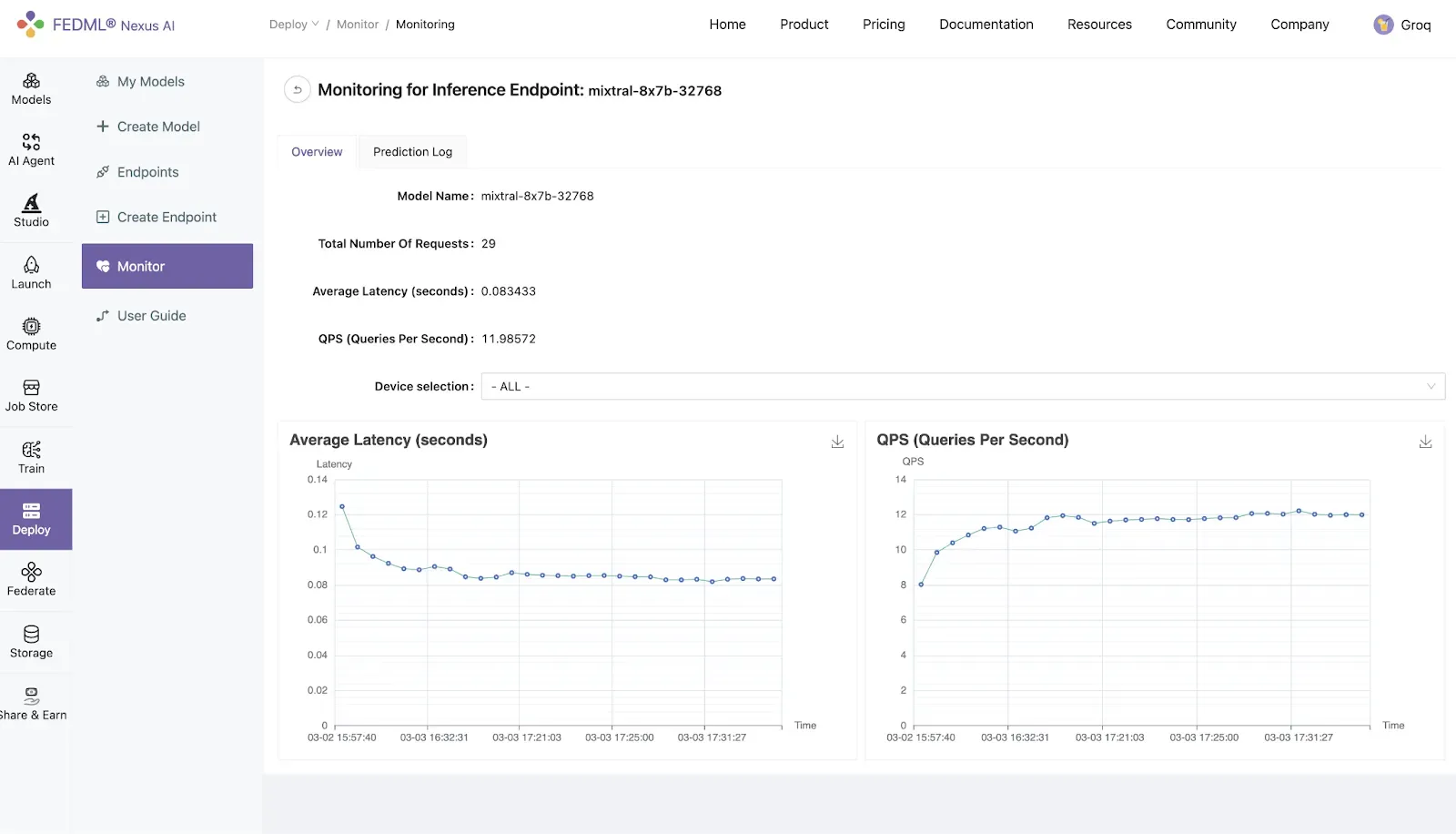

Leveraging FEDML's comprehensive endpoint monitoring and management system, developers can track the performance metrics of Groq's API, focusing on key indicators such as latency and queries per second (QPS). This capability allows a detailed comparison of Groq's API performance against that of other APIs.

The figure above shows that FEDML-Groq latency is under 100 ms and that it can support higher QPS than many NVIDIA GPU-based solutions.
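To make these indicators concrete, here is a minimal sketch of how latency percentiles, QPS, and decoding speed could be computed from raw per-request logs. The RequestLog fields and the nearest-rank percentile method are assumptions for illustration; FEDML's dashboard computes its metrics internally and may define them differently. The sample logs are synthetic, not measurements.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestLog:
    start_s: float      # when the request was sent (seconds)
    end_s: float        # when the full response arrived (seconds)
    output_tokens: int  # tokens generated for this request


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(logs: list[RequestLog]) -> dict[str, float]:
    latencies_ms = [(r.end_s - r.start_s) * 1000 for r in logs]
    # QPS over the observed window: first request sent to last response received.
    window_s = max(r.end_s for r in logs) - min(r.start_s for r in logs)
    # Average decoding speed; end-to-end time also includes time to first token.
    busy_s = sum(r.end_s - r.start_s for r in logs)
    return {
        "p50_latency_ms": percentile(latencies_ms, 50),
        "p95_latency_ms": percentile(latencies_ms, 95),
        "qps": len(logs) / window_s,
        "avg_tokens_per_s": sum(r.output_tokens for r in logs) / busy_s,
    }


# Synthetic example logs (made-up numbers, not measurements).
SAMPLE_LOGS = [
    RequestLog(0.0, 0.0625, 30),
    RequestLog(0.5, 0.625, 60),
    RequestLog(1.0, 1.125, 60),
    RequestLog(1.5, 2.0, 240),
]

if __name__ == "__main__":
    print(summarize(SAMPLE_LOGS))
```

In the synthetic sample, 390 output tokens over 0.8125 s of request time gives an average of 480 tokens/s, while the p95 latency is dominated by the single long 240-token request, which is why tail percentiles are tracked alongside averages.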

How Does the FEDML-Groq Integration Work?

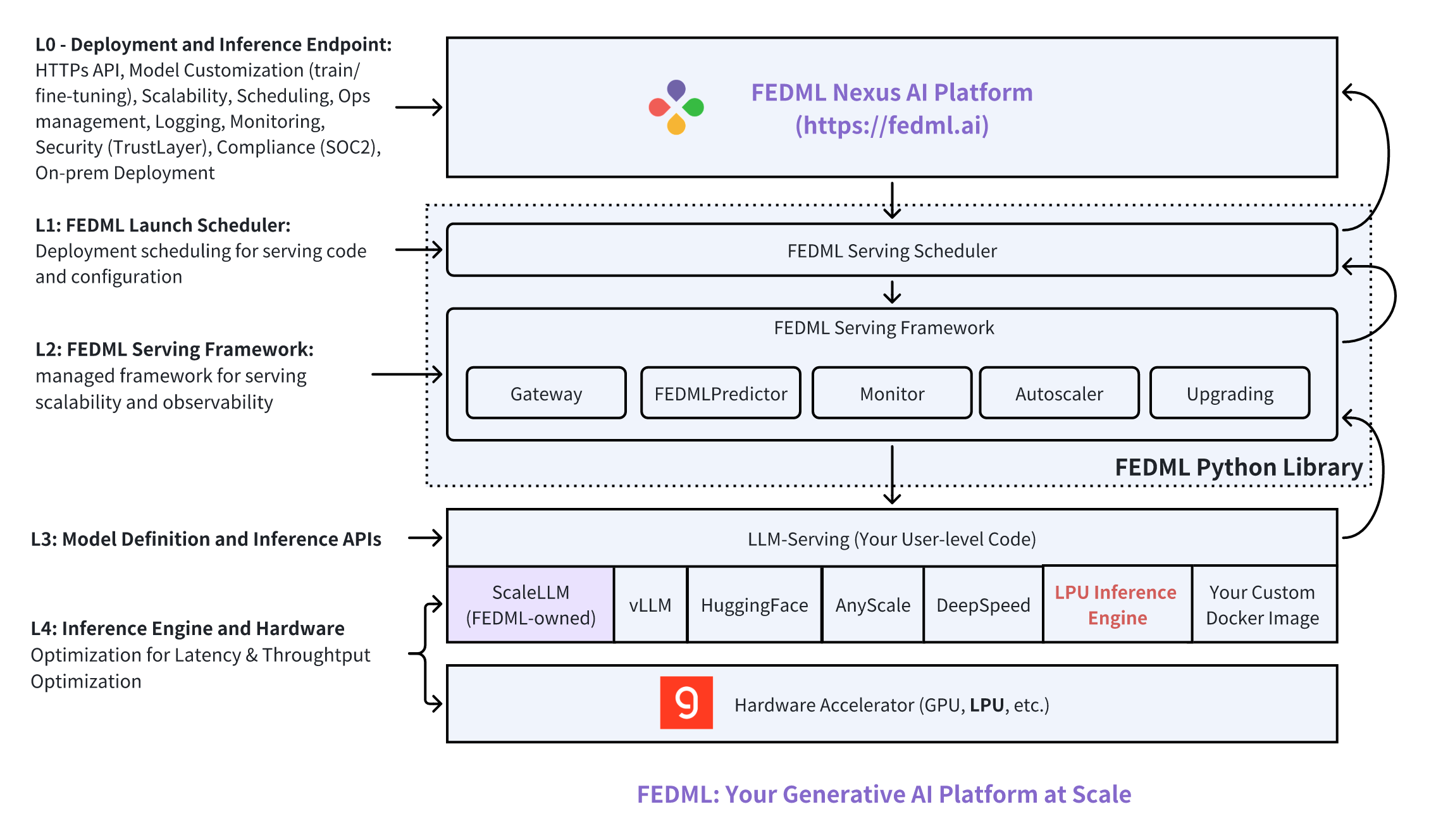

The FEDML Nexus AI platform provides a full stack of GenAI model service capabilities, such as model deployment, inference, training, AI agents, GPU job scheduling, multi-cloud/decentralized GPU management, experiment tracking, and model service monitoring. Next, we explain how the FEDML-Groq integration works.

Five-layer Model Serving Architecture

The figure above shows FEDML Nexus AI's perspective on model inference service, which is divided into a five-layer architecture:

- Layer 0 (L0) - Deployment and Inference Endpoint. This layer provides the HTTPS API, model customization (training/fine-tuning), scalability, scheduling, ops management, logging, monitoring, security (e.g., a trust layer for LLMs), compliance (SOC 2), and on-prem deployment.

- Layer 1 (L1) - FEDML Launch Scheduler. It works with the L0 MLOps platform to handle the deployment workflow that runs serving code and configuration on GPU devices.

- Layer 2 (L2) - FEDML Serving Framework. This managed framework provides serving scalability and observability. It loads the serving engine and the user-level serving code.

- Layer 3 (L3) - Model Definition and Inference APIs. Developers can define the model architecture, the inference engine to run the model, and the related schema of the model inference APIs.

- Layer 4 (L4) - Inference Engine and Hardware. This is the layer where many machine learning systems researchers and hardware accelerator companies work to optimize inference latency and throughput.
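To illustrate how layers L2 through L4 separate concerns, the sketch below keeps the inference engine (L4) behind a small interface, puts the input schema in the model definition (L3), and wraps the model with latency recording (L2). The class names are hypothetical and this is not the FEDML serving API; EchoEngine stands in for a real engine such as a GPU runtime or a remote LPU-backed API. L0 and L1 (the HTTPS endpoint and the scheduler) are omitted.

```python
import time
from typing import Protocol


class InferenceEngine(Protocol):
    """L4: whatever actually runs the model (GPU runtime, remote LPU API, ...)."""

    def generate(self, prompt: str, max_tokens: int) -> str: ...


class ChatModel:
    """L3: model definition plus the schema of its inference API."""

    required_fields = {"prompt": str}

    def __init__(self, engine: InferenceEngine) -> None:
        self.engine = engine

    def validate(self, request: dict) -> None:
        for name, typ in self.required_fields.items():
            if not isinstance(request.get(name), typ):
                raise ValueError(f"field {name!r} must be {typ.__name__}")

    def predict(self, request: dict) -> dict:
        self.validate(request)
        max_tokens = int(request.get("max_tokens", 256))
        return {"generated_text": self.engine.generate(request["prompt"], max_tokens)}


class ServingWrapper:
    """L2: managed wrapper that adds observability around the user-level model."""

    def __init__(self, model: ChatModel) -> None:
        self.model = model
        self.latencies_ms: list[float] = []

    def handle(self, request: dict) -> dict:
        t0 = time.perf_counter()
        try:
            return self.model.predict(request)
        finally:
            # Record latency for successful and failed requests alike.
            self.latencies_ms.append((time.perf_counter() - t0) * 1000)


class EchoEngine:
    """Stand-in engine for local testing: echoes the first max_tokens characters."""

    def generate(self, prompt: str, max_tokens: int) -> str:
        return prompt[:max_tokens]
```

Because ChatModel depends only on the generate() interface, swapping one engine for another (for example, a GPU runtime for an LPU-backed API) leaves the L2 and L3 code untouched in this sketch.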

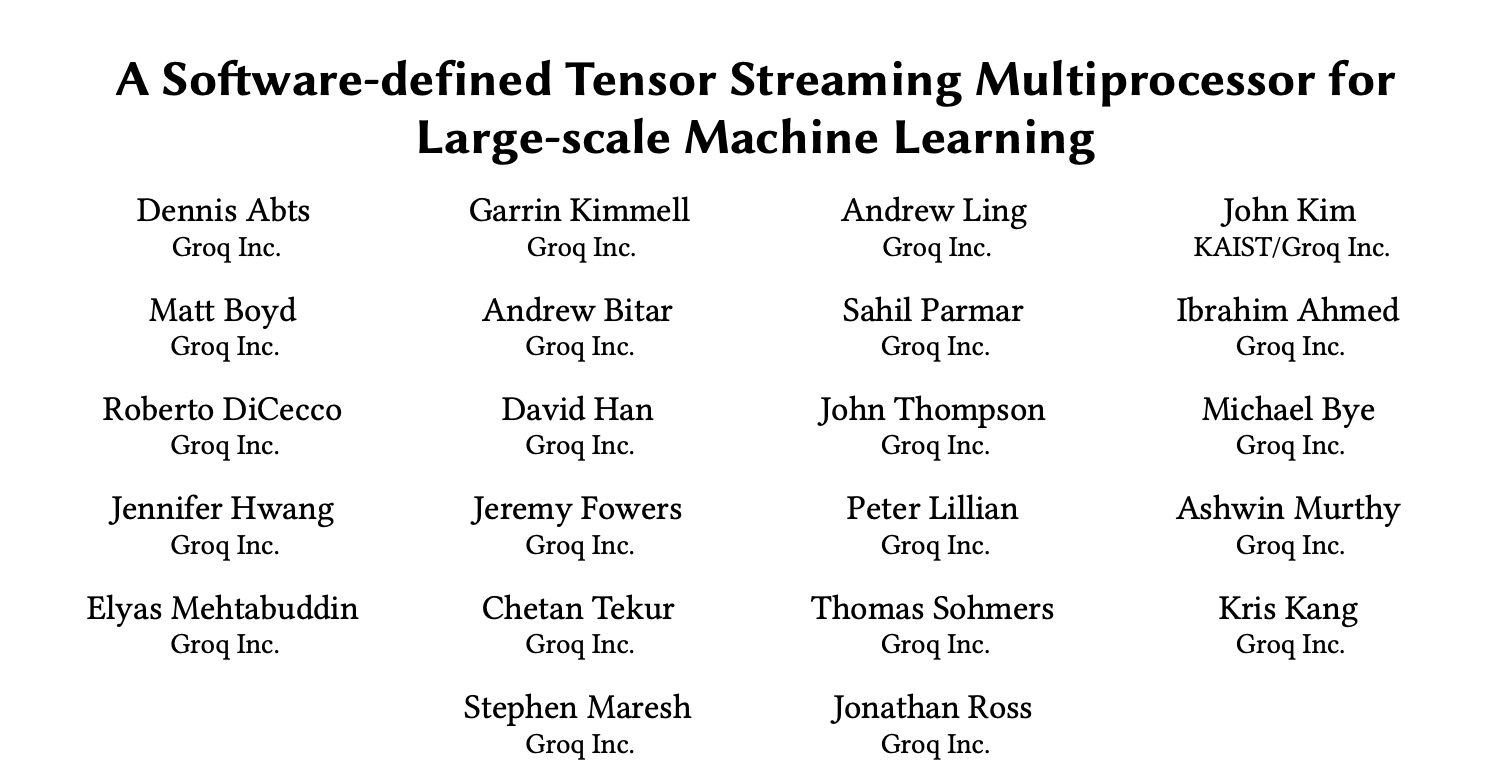

Groq's Language Processing Unit (LPU)

Groq's LPU accelerator for LLMs is introduced in the paper "A Software-defined Tensor Streaming Multiprocessor for Large-scale Machine Learning."

The paper describes the system architecture of a novel, purpose-built commercial system for scalable ML and converged HPC applications. Its source-based, software-scheduled routing algorithm allows the automatic parallelizing compiler to load-balance the global links of the Dragonfly network. Because this load balancing is deterministic, the compiler can schedule the physical network channels either to spread a large tensor across multiple non-minimal paths for maximum throughput, or to use minimal routing for a barrier-free all-reduce with minimal end-to-end latency. The paper reports strong system performance on representative workloads such as distributed matrix multiplication, All-Reduce, BERT-Large, and Cholesky factorization. The design also extends the Tensor Streaming Processor (TSP) stream programming model from a single chip to large-scale, system-wide determinism, combining hardware-alignment counters with ISA support for runtime deskew operations to provide the illusion of a globally synchronous distributed system. For more details, please read the paper.
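The throughput-versus-latency trade-off between non-minimal and minimal routing can be seen with a toy first-order cost model: each route costs its hop latency plus the time to serialize its share of the tensor. This simplification is our own, not the model from the paper; the link numbers are made up, and contention, congestion, and collective algorithms are ignored.

```python
from dataclasses import dataclass


@dataclass
class Link:
    bandwidth_gb_per_s: float  # per-route bandwidth in GB/s (toy value)
    hop_latency_us: float      # latency added per hop in microseconds (toy value)


def transfer_time_us(size_gb: float, link: Link, hops: int, routes: int) -> float:
    """Hop latency plus serialization time of one route's share of the tensor.

    Assumes the tensor is split evenly across `routes` link-disjoint routes with
    the same hop count, so every share finishes at the same time.
    """
    share_gb = size_gb / routes
    return hops * link.hop_latency_us + share_gb / link.bandwidth_gb_per_s * 1e6


def pick_routing(size_gb: float, link: Link, min_hops: int,
                 nonmin_hops: int, routes: int) -> str:
    """Compare one minimal route against `routes` longer, non-minimal routes."""
    minimal = transfer_time_us(size_gb, link, min_hops, routes=1)
    spread = transfer_time_us(size_gb, link, nonmin_hops, routes)
    return "minimal" if minimal <= spread else "spread"
```

With 10 GB/s routes and 1 µs hops, a 1 KB message is fastest on a 2-hop minimal route, while a 1 GB tensor finishes roughly 4x sooner when spread over four 4-hop routes: small messages are latency-bound, large tensors are bandwidth-bound.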

Scale AI Agent Backed by Distributed/Cross-cloud Endpoint and Vector DB

One of the unique features of FEDML Nexus AI model serving is that a single endpoint can run across geo-distributed GPU clouds. The platform further scales AI agents by enabling a distributed vector DB. Such a system provides a range of benefits, from improved reliability to better performance:

- Increased Reliability and Availability. By spreading resources across multiple clouds, developers can ensure that if one provider experiences downtime, the model service can still run on the other providers. Automated failover processes ensure that traffic is redirected to operational instances in the event of a failure.

- Scalability. Cloud services typically offer the ability to scale resources up or down. Multiple providers can offer even greater flexibility and capacity. Distributing the load across different clouds can help manage traffic spikes and maintain performance.

- Performance. Proximity to users can reduce latency. By running endpoints on different clouds, developers can optimize for geographic distribution. Different clouds might offer specialized GPU types that are better suited for particular types of workloads.

- Cost Efficiency. Prices for cloud services vary. Because FEDML Nexus AI uses multiple providers, developers can take advantage of the best pricing models. Developers can also bid for unused capacity at a lower price, which might be available from different providers at different times.

- Risk Management and Data Sovereignty. Distributing across different regions can mitigate risks associated with policy regulations affecting service availability. For compliance reasons, developers might need to store and process data in specific jurisdictions. Multiple clouds can help meet these requirements.
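The reliability, performance, cost, and data-sovereignty points above can be combined into a single routing decision. The sketch below ranks healthy replicas by measured round-trip time plus an optional cost penalty, restricts them to allowed regions, and fails over to the next replica on a connection error. It is a hypothetical illustration of such a policy, not FEDML Nexus AI's actual scheduler; the Replica fields and the weighting scheme are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


@dataclass
class Replica:
    name: str
    provider: str          # which GPU cloud hosts this replica
    region: str
    healthy: bool          # result of the latest health check
    rtt_ms: float          # measured round-trip time from the caller
    price_per_hour: float  # GPU price for this replica


def rank_replicas(
    replicas: Iterable[Replica],
    allowed_regions: Optional[set[str]] = None,
    cost_weight: float = 0.0,
) -> list[Replica]:
    """Keep healthy replicas in allowed regions, lowest score first."""
    eligible = [
        r for r in replicas
        if r.healthy and (allowed_regions is None or r.region in allowed_regions)
    ]
    # Score = latency plus an optional price penalty (ms per $/hour).
    return sorted(eligible, key=lambda r: r.rtt_ms + cost_weight * r.price_per_hour)


def call_with_failover(
    replicas: Iterable[Replica],
    send: Callable[[Replica], T],
    allowed_regions: Optional[set[str]] = None,
    cost_weight: float = 0.0,
) -> T:
    """Try replicas best-first; on a connection error, fail over to the next one."""
    last_error: Optional[Exception] = None
    for replica in rank_replicas(replicas, allowed_regions, cost_weight):
        try:
            return send(replica)
        except ConnectionError as exc:
            last_error = exc
    raise RuntimeError("no eligible replica could serve the request") from last_error
```

Setting cost_weight to 0 optimizes purely for proximity; raising it trades some latency for cheaper capacity, and allowed_regions enforces jurisdiction constraints before either is considered.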

Further Advances: Customize, Deploy, and Scale

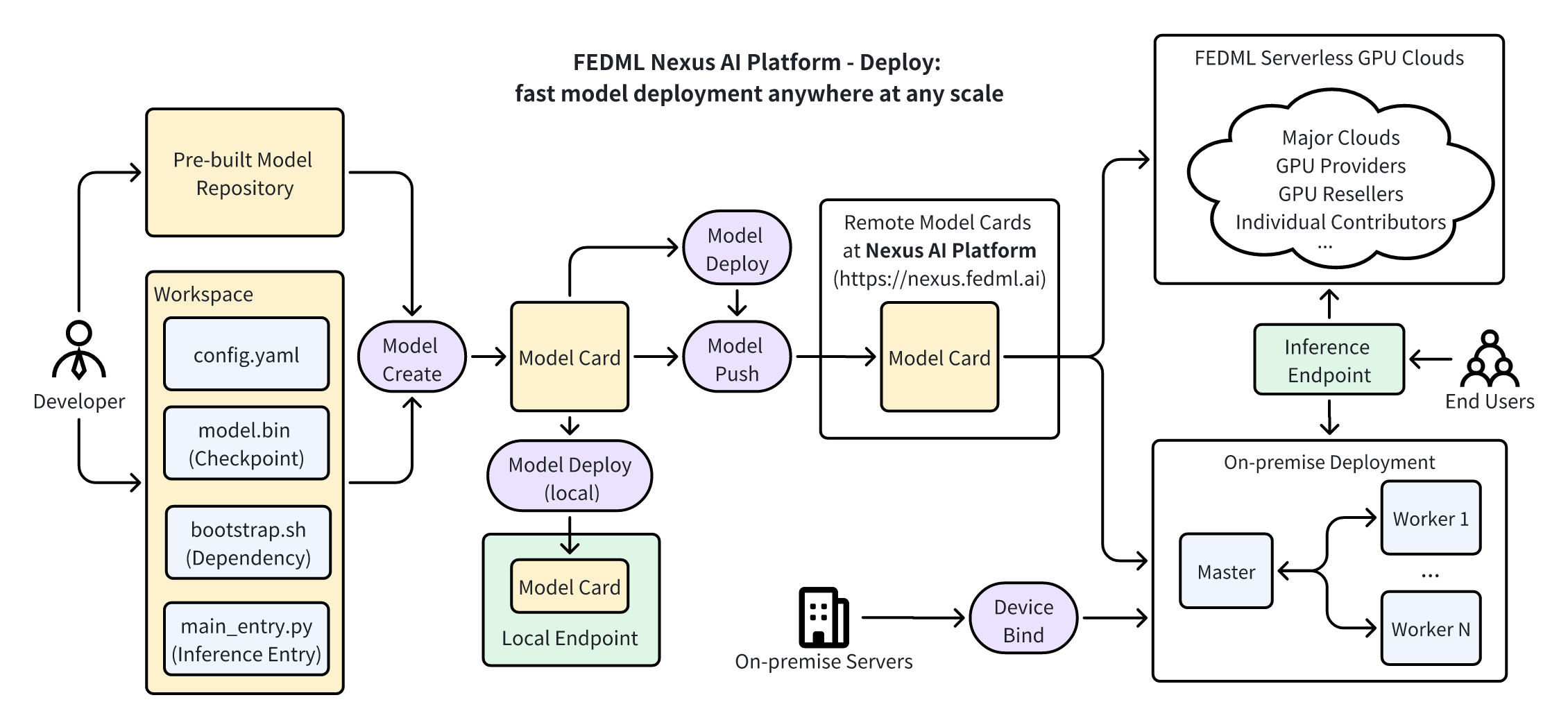

Beyond integrating the Groq inference engine and LPU accelerator for faster model endpoints and AI Agent creation, the FEDML Nexus AI platform provides further capabilities to customize, deploy, and scale any model. A specific deployment workflow is shown in the diagram above. Developers can deploy their custom Docker image, or just their serving-related Python scripts, to the FEDML Nexus AI platform for deployment on a distributed/decentralized GPU cloud. For detailed steps, please refer to https://doc.fedml.ai.

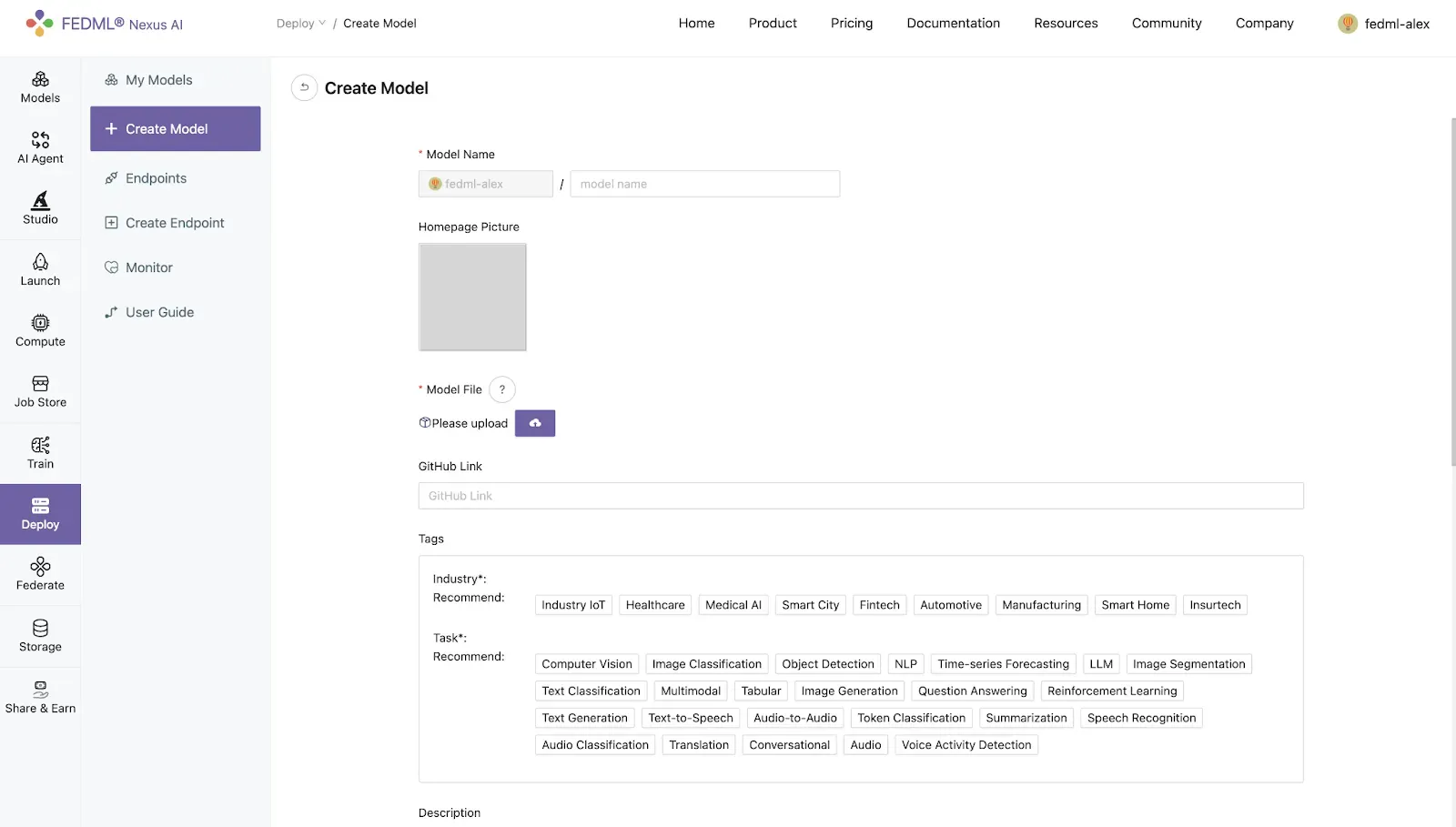

- Build a Model from Scratch or Import from the Marketplace

For more details, please see the FEDML documentation: https://doc.fedml.ai/deploy/create_model.

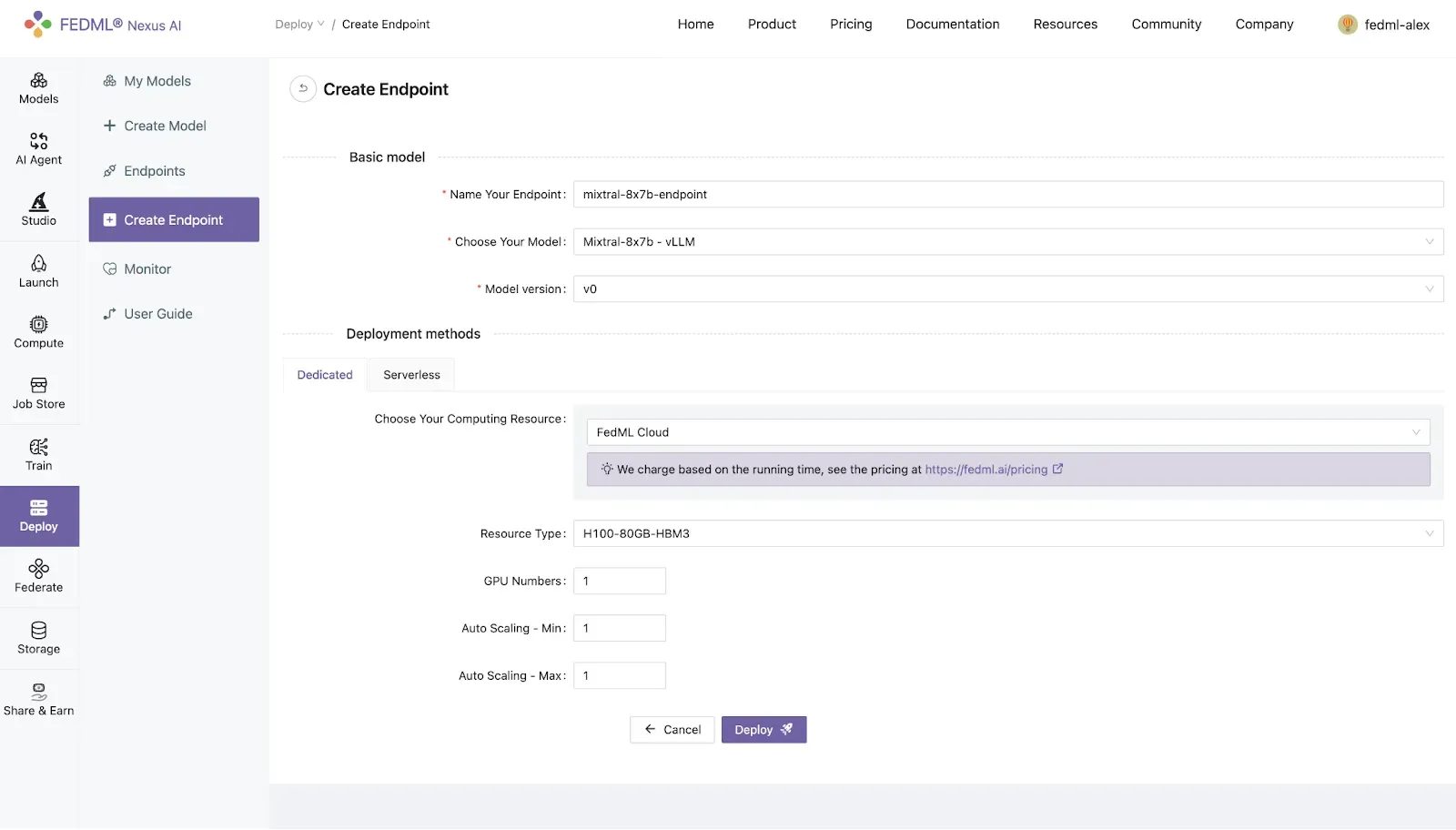

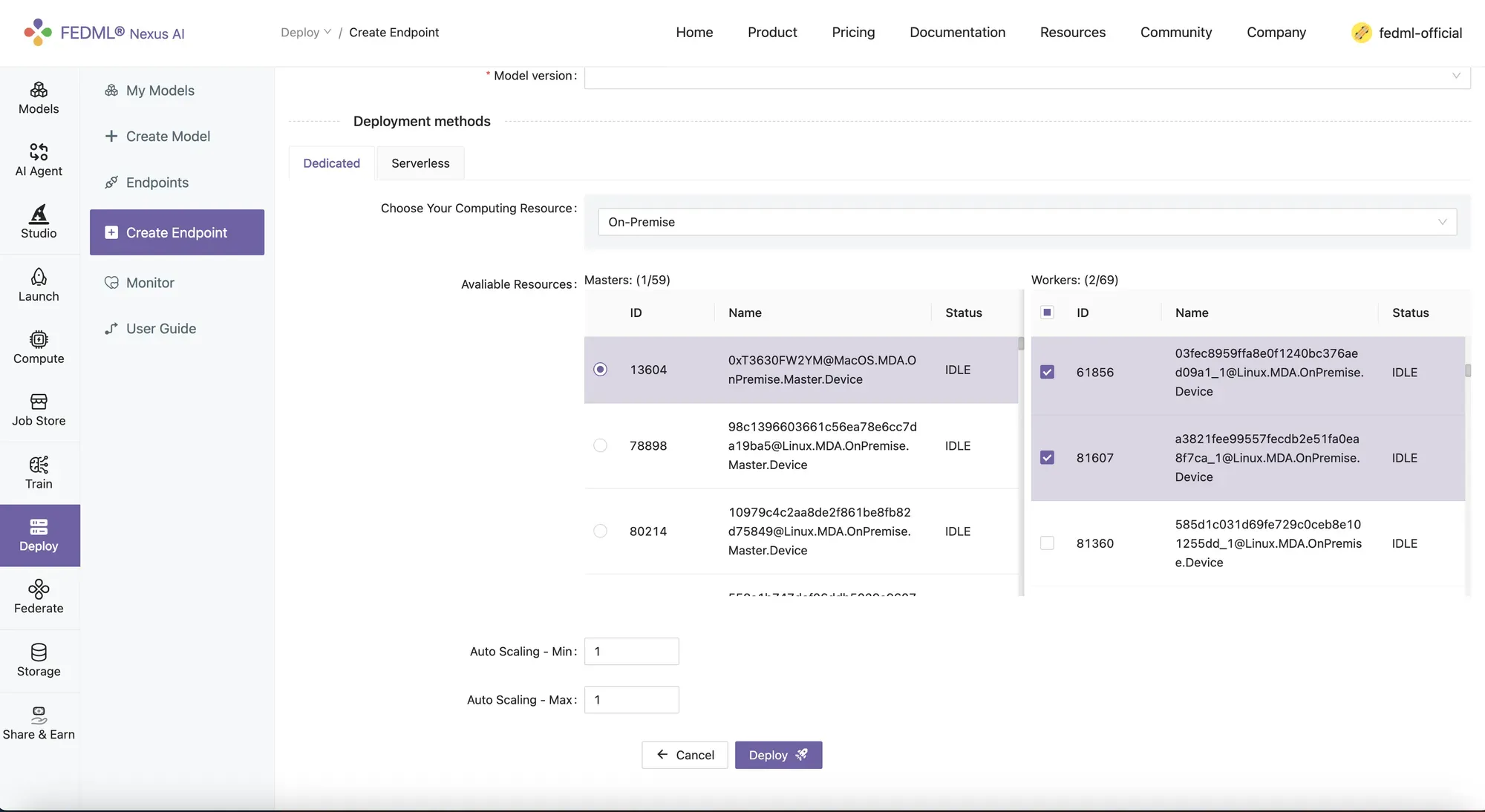

- Streamline Model Deployment: Dedicated/Serverless Endpoints with UI/CLI modes

- Running a Single Model Endpoint across Geo-distributed GPUs

When allocating on-premise resources for a specific endpoint, we can select GPU workers across geo-distributed GPUs.

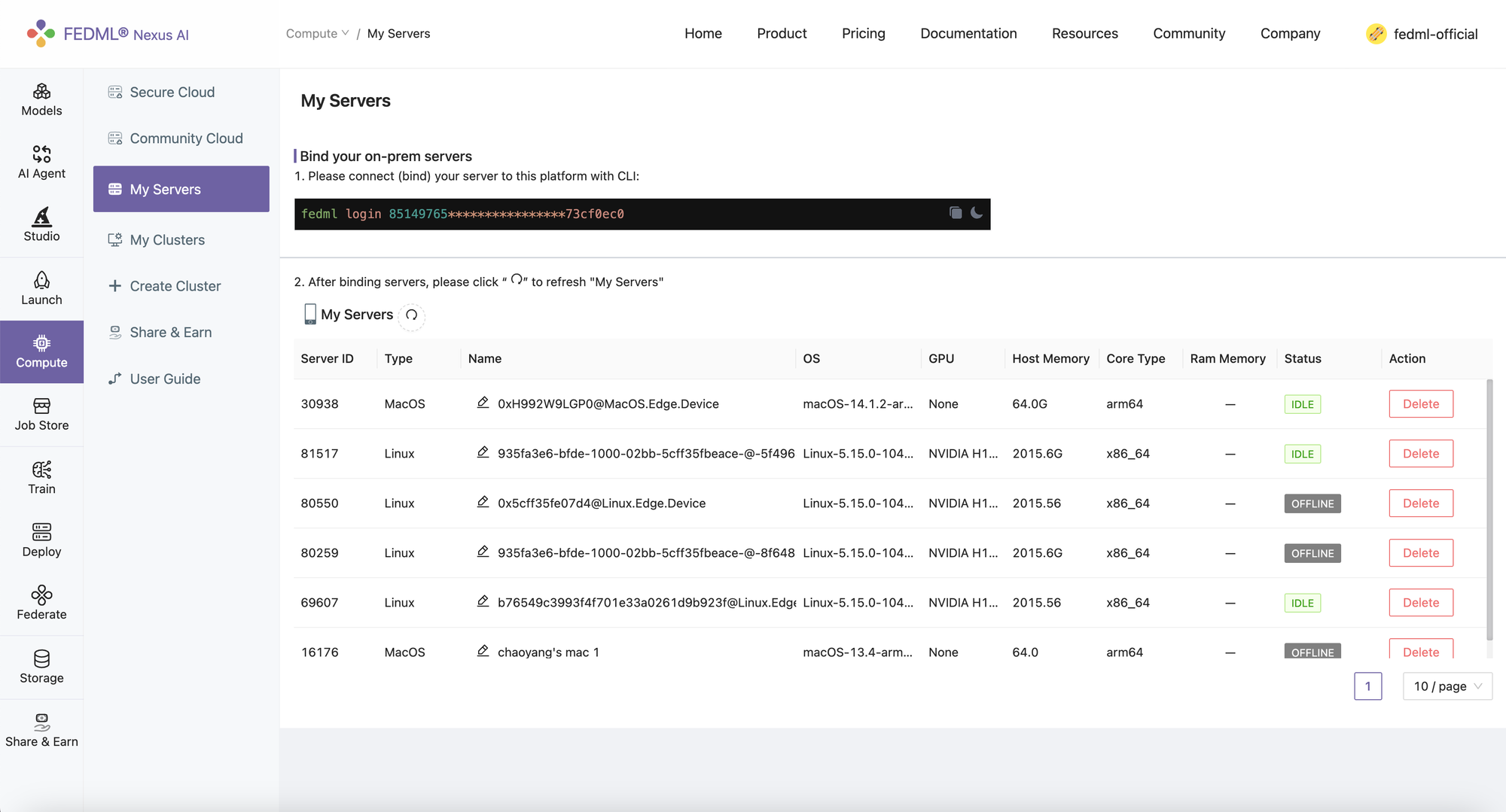

Geo-distributed GPUs can be bound under Compute -> My Servers with the one-line command "fedml login <API key>". For more details, please see the FEDML documentation: https://doc.fedml.ai/deploy/deploy_on_premise.

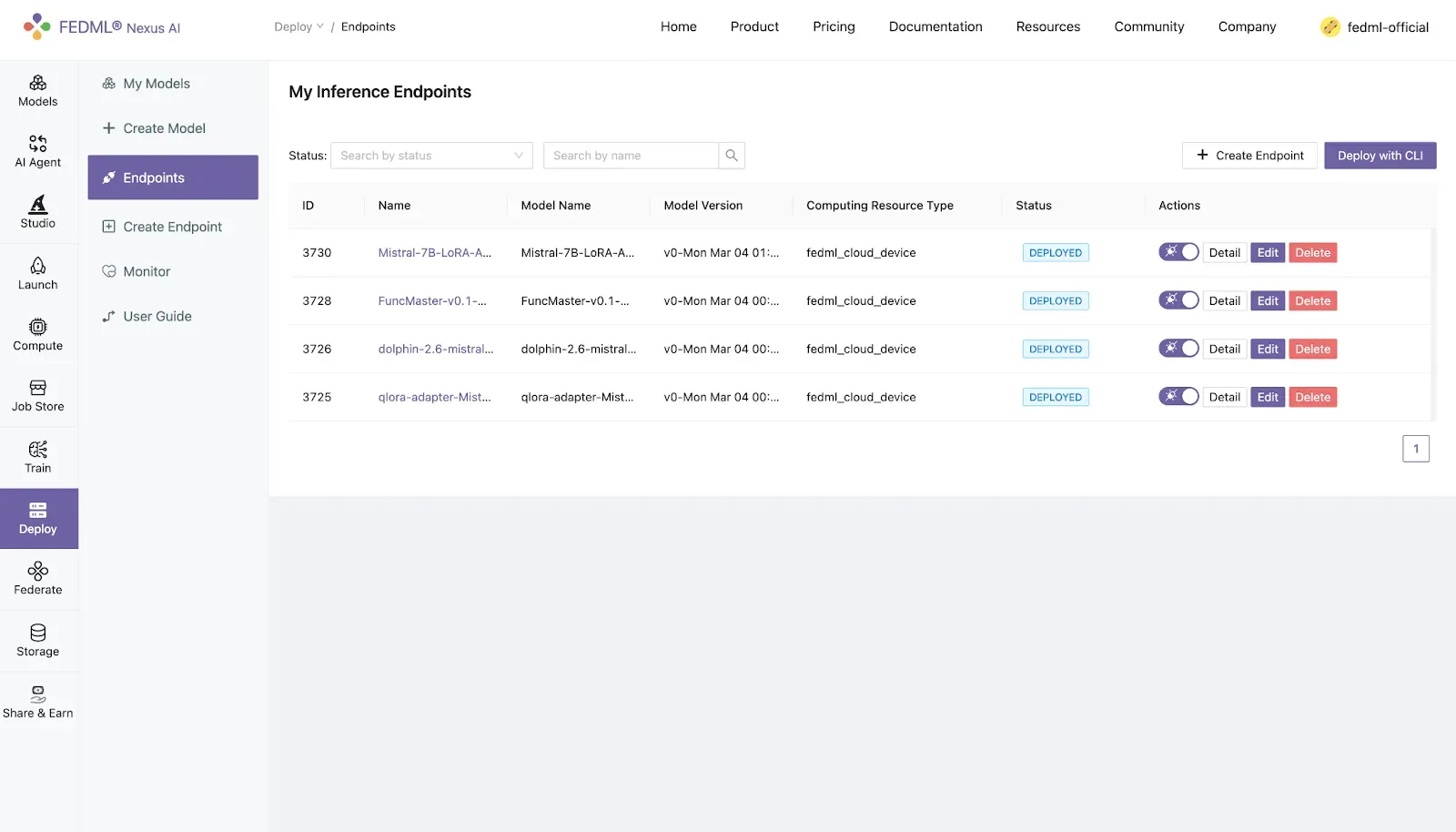

- Manage & Monitor Deployed Models.

About FEDML, Inc.

FEDML is your generative AI platform at scale, enabling developers and enterprises to build and commercialize their own generative AI applications easily, scalably, and economically. Its flagship product, FEDML Nexus AI, provides unique features in enterprise AI platforms, model deployment, model serving, AI agent APIs, launching training/inference jobs on serverless/decentralized GPU clouds, experiment tracking for distributed training, federated learning, security, and privacy.

FEDML, Inc. was founded in February 2022. With over 4,500 platform users from 500+ universities and 100+ enterprises, FEDML is enabling organizations of all sizes to build, deploy, and commercialize their own LLMs and AI agents. The company's enterprise customers span a wide range of industries, including generative AI/LLM applications, mobile ads/recommendations, AIoT (logistics/retail), healthcare, automotive, and web3. The company has raised a $13.2M seed round. As a fun fact, FEDML is currently located at the Silicon Valley "Lucky building" (165 University Avenue, Palo Alto, CA), where Google, PayPal, Logitech, and many other successful companies started.